AI Technology Explained

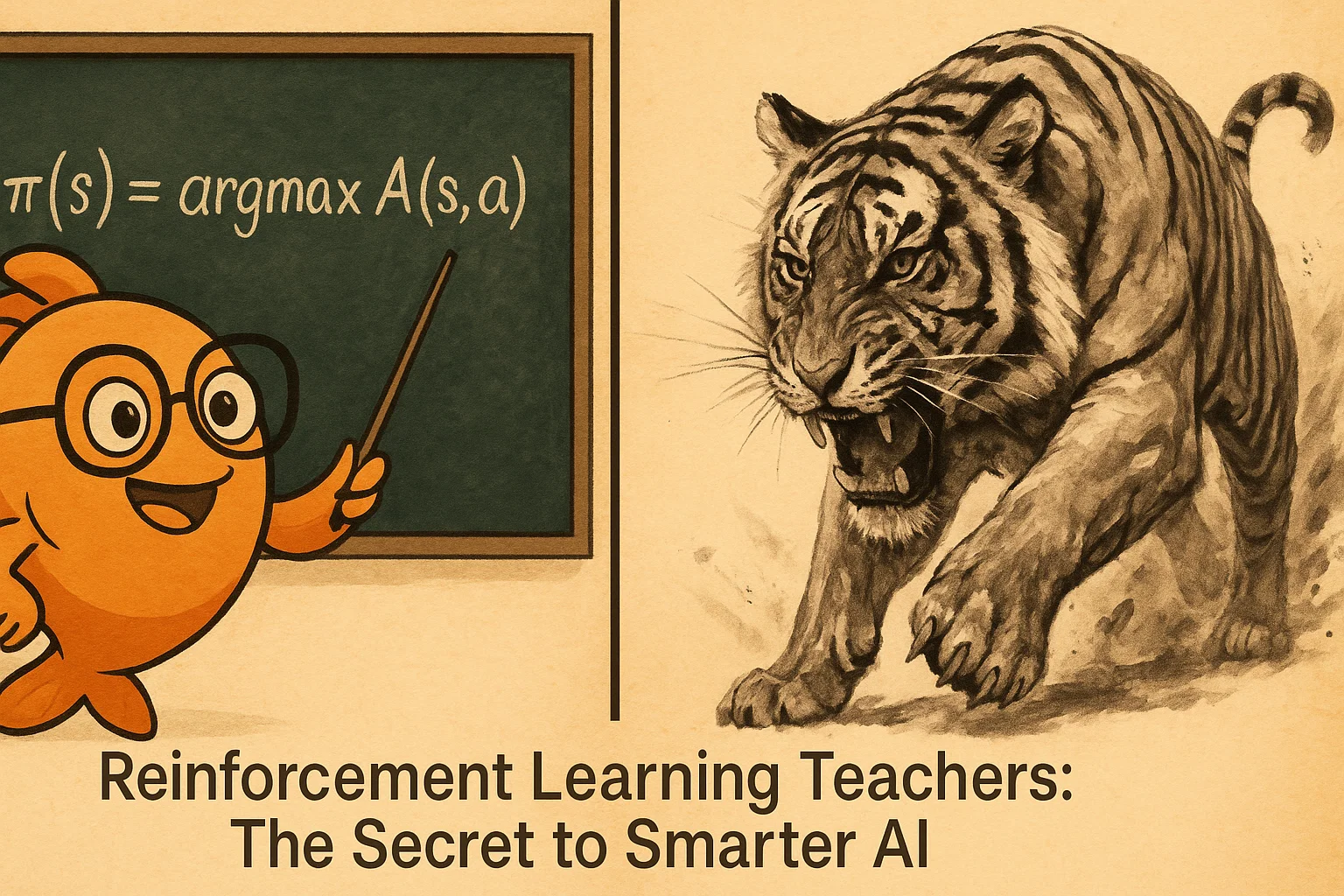

Reinforcement Learning Teachers: The Secret to Unlocking Cheaper, Smarter AI

The world of AI is moving at a breakneck pace, but what if we could make it even faster, cheaper, and more efficient? Sakana AI, the brilliant minds behind the self-improving Darwin Gödel Machine, are back with a potentially revolutionary paper that rethinks the very foundation of how we train AI models. Their latest open-source project introduces the concept of Reinforcement Learning Teachers (RLT), a paradigm shift that could unlock new frontiers for advanced and affordable AI.

This new method flips the traditional training process on its head. Instead of just teaching an AI to solve a problem, Sakana AI has taught an AI how to teach. The results are surprising: smaller, specialized AI teachers can impart deep reasoning skills even to much larger student models.

First, What is Reinforcement Learning (RL)?

Before diving into Sakana AI’s innovation, let’s quickly recap Reinforcement Learning (RL). Think of it like training a dog. In RL, you have:

- An agent (the AI model, or the dog).

- An environment (the problem or world it interacts with).

- Actions the agent can take.

- Rewards (or penalties) for those actions.

The agent takes actions and observes the results. When it does something that brings it closer to the desired goal, it receives a positive reward, like a virtual “good boy!” or a high-five. If it does something unhelpful, it might get a negative reward. The goal is for the agent to learn a strategy that maximizes its total reward over time. This is a core technique used to train AIs for tasks ranging from playing games to writing code.
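Here is a concrete version of that loop. This is a minimal sketch of tabular Q-learning, a classic RL algorithm we picked for illustration. Neither this article nor the papers it discusses prescribe it. The environment is a hypothetical five-cell corridor: the agent starts at one end, pays a small penalty for every step, and earns +1 for reaching the other end.

```python
import random

# Hypothetical toy environment: a corridor of 5 cells. The agent starts in cell 0;
# reaching cell 4 ends the episode with +1, every other step costs -0.01.
N_STATES = 5
GOAL = N_STATES - 1
ACTIONS = (-1, +1)  # move left, move right

def step(state: int, action: int) -> tuple[int, float, bool]:
    """Apply an action; return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), GOAL)
    if next_state == GOAL:
        return next_state, 1.0, True
    return next_state, -0.01, False

def best_action(q: list[list[float]], state: int) -> int:
    """Index of the highest-valued action in a state (ties go to the first)."""
    return max(range(len(ACTIONS)), key=lambda a: q[state][a])

def train(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
          epsilon: float = 0.1, seed: int = 0) -> list[list[float]]:
    """Tabular Q-learning: estimate the long-term value of each (state, action)."""
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually exploit the best-known action, sometimes explore.
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = best_action(q, state)
            next_state, reward, done = step(state, ACTIONS[a])
            # Nudge the estimate toward reward + discounted value of the next state.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q

def greedy_policy(q: list[list[float]]) -> list[int]:
    """The move the trained agent would make in each non-goal cell."""
    return [ACTIONS[best_action(q, s)] for s in range(GOAL)]

q_table = train()
print(greedy_policy(q_table))
```

After training, the greedy policy should move right in every cell, because that is the shortest route to the reward.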

The Traditional Approach: “Learning to Solve”

Traditionally, advanced AI models are trained using a “Learning to Solve” method. Here, the AI model itself is the student. It’s given a complex task and learns through trial and error, reinforced by rewards for correct answers.

A good example is GameNGen, an AI that learned to generate the game DOOM in real time, not from code, but by “dreaming” it into existence. To gather training data for it, the creators used RL to train AI agents to play DOOM. Part of the reward function looked like this:

- Enemy Kill: +1,000 points

- Enemy Hit: +300 points

- Player Hit: -100 points

- Player Death: -5,000 points
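Scoring an episode under a reward function like this is simple addition. Below is a minimal sketch that uses only the four point values listed. The real reward may include other terms, and the event names are hypothetical.

```python
from collections import Counter

# Point values from the list above; the event names are hypothetical labels.
REWARDS = {
    "enemy_kill": 1_000,
    "enemy_hit": 300,
    "player_hit": -100,
    "player_death": -5_000,
}

def episode_score(events: list[str]) -> int:
    """Sum the reward for every event logged during one episode."""
    counts = Counter(events)
    return sum(REWARDS[name] * n for name, n in counts.items())

# Two hits and a kill, minus one hit taken: 300 + 300 + 1000 - 100.
print(episode_score(["enemy_hit", "enemy_hit", "enemy_kill", "player_hit"]))
```

The large death penalty means that one careless death wipes out several kills. That pushes the agent toward play that is both aggressive and careful.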

The AI’s goal was simple: maximize its score by learning to play the game well. This process, while effective, can be slow, costly, and often results in models that are narrowly focused. They become very good at the specific tasks they were trained on but struggle to generalize their skills to broader applications.

Sakana AI’s Breakthrough: Reinforcement Learning Teachers (RLT)

Sakana AI’s new paper flips this paradigm. Instead of “Learning to Solve,” their method is all about “Learning to Teach.”

How RLT Flips the Script

In the RLT framework, the roles are redefined. You have a “teacher” model and a “student” model.

- The Teacher Knows the Answer: The teacher model isn’t trying to solve a problem from scratch. It is given both the question and the correct answer.

- The Goal is Explanation: The teacher’s primary task is to generate the best possible step-by-step explanation for how to arrive at the known solution.

- Reward is Based on Student Success: The teacher is rewarded based on how effectively its explanation helps a separate “student” model understand and solve the problem.

This creates a powerful feedback loop. The teacher is optimized not for solving, but for being helpful. This aligns the training with its true purpose: effectively transferring knowledge, much like an expert human educator.
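Here is one way to turn “how much the explanation helps the student” into a number. This is a simplified stand-in, not the paper's exact reward, which includes further terms that are not reproduced here. Assume we can read off the log-probability the student model assigns to each token of the known solution after it has seen the question plus the teacher's explanation. Explanations that make the correct answer more predictable for the student then score higher.

```python
import math
from statistics import fmean

def teacher_reward(solution_logprobs: list[float]) -> float:
    """Score an explanation by how predictable it makes the known solution.

    solution_logprobs: hypothetical per-token log-probabilities that the student
    assigns to the reference solution, conditioned on the question plus the
    teacher's explanation. Values closer to 0 mean the student found it easier.
    """
    if not solution_logprobs:
        raise ValueError("need at least one solution token")
    return fmean(solution_logprobs)

# Hypothetical student log-probabilities for the same three-token solution,
# after reading two different explanations from the teacher.
clear_explanation = [math.log(0.9), math.log(0.8), math.log(0.95)]
vague_explanation = [math.log(0.4), math.log(0.2), math.log(0.5)]

scores = {
    "clear": teacher_reward(clear_explanation),
    "vague": teacher_reward(vague_explanation),
}
best = max(scores, key=scores.get)
print(best)
```

In an RL setup, this score would be the reward used to update the teacher. The teacher is never asked to find the answer itself, only to make it easy for the student to follow.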

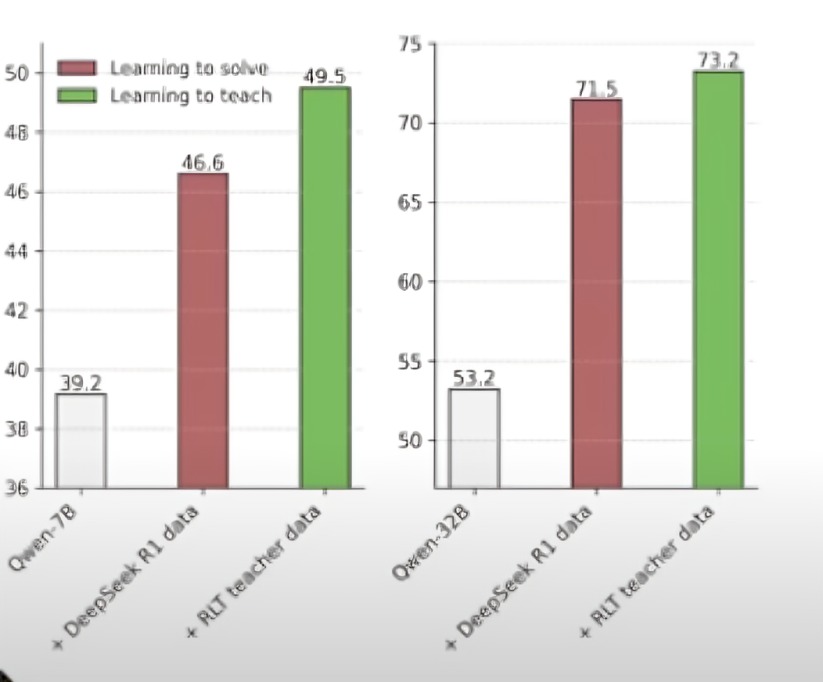

The Surprising Results: Smaller Teachers, Smarter Students

The results of this approach are astounding. The paper demonstrates that a compact, 7-billion-parameter RLT teacher model is better at teaching reasoning skills than orders-of-magnitude larger LLMs.

When tested against complex benchmarks like the American Invitational Mathematics Examination (AIME), these small, specialized teachers helped student models reach higher levels of performance than traditional RL training with massive, expensive models. For instance, training a 32B parameter student model with the RLT method took less than a day on a single compute node, whereas traditional RL would have taken months on the same hardware.

This makes advanced AI more affordable and much faster to train.

The Future: A New Frontier of More Advanced and Cheaper Reasoning Models

This work by Sakana AI points toward a future where we rethink how AI models are built. The RLT framework could disrupt the cost of training advanced models. Instead of relying on massive systems at every stage, we can train small, specialized teachers and use them to teach much larger models efficiently.

This flips the traditional scaling paradigm: the heaviest work (teaching) is handled by compact, affordable models that unlock powerful capabilities in the students they train.

Looking ahead, this framework even hints at something more intriguing: a model that can play both the teacher and student roles at once. By generating explanations for its own benefit, such a system could learn how to teach itself better over time. This idea echoes the vision of the Darwin Gödel Machine, where a model evolves through self-reflection and recursive learning.

Sakana AI has once again dropped a paper with massive implications. By making the code and methods open source, they’ve invited the entire community to explore this new frontier. As more labs adopt this “learning to teach” approach, we may be on the cusp of a true revolution in AI development.

DeepSeek OCR: Discover the Ultimate Trick for AI Data Compression

In the ever-evolving world of artificial intelligence, efficiency is king. While major announcements often come with fanfare, some of the most groundbreaking innovations arrive quietly. The latest “DeepSeek moment” is a perfect example, introducing a technology that could fundamentally change how we feed information to large language models. This new frontier is called DeepSeek OCR, and it’s a powerful exploration into optical context compression that has massive implications for the future of AI.

What is DeepSeek OCR and How Does it Work?

At its core, DeepSeek OCR (Optical Character Recognition) is a new method for compressing long text for LLMs by way of images. Instead of feeding a model pages and pages of text (which consumes a lot of tokens), this technology renders that text as an image. The model then processes the image as a much smaller set of vision tokens, which still carry most of the original information in a highly compressed form.

The implications are staggering. According to the vLLM project, the model runs blazingly fast, at approximately 2,500 tokens/s on an A100-40G GPU. It can compress visual contexts by up to 20x and keeps about 97% OCR accuracy at compression ratios below 10x. At around 20x, the DeepSeek OCR paper reports that accuracy falls to roughly 60%.
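Compression figures like “10x” or “20x” are ratios: the number of text tokens that each vision token replaces. A minimal sketch of that arithmetic follows. The page size is hypothetical and illustrative, not taken from the paper.

```python
def compression_ratio(text_tokens: int, vision_tokens: int) -> float:
    """How many text tokens each vision token stands in for."""
    if vision_tokens <= 0:
        raise ValueError("vision_tokens must be positive")
    return text_tokens / vision_tokens

# Hypothetical page: 1,000 text tokens represented by 100 vision tokens.
ratio = compression_ratio(1_000, 100)
print(f"{ratio:.0f}x")
```

The same page squeezed into 50 vision tokens would be a 20x ratio. That is the regime where the reported accuracy drops sharply.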

Unpacking the Performance Gains

A performance chart for the OmniDocBench benchmark tells a compelling story. The chart plots “Overall Performance” against the “Average Vision Tokens per Image.”

- Reading the Chart: As you move to the right on the chart, the number of vision tokens used to represent an image decreases. As you move up, overall performance improves.

- DeepSeek’s Dominance: The DeepSeek OCR models (red dots) sit toward the top right of the graph, meaning they reach strong performance with far fewer vision tokens than models like GOT-OCR2.0 and MinerU2.0. For example, the paper reports that DeepSeek OCR beats GOT-OCR2.0 (256 tokens per page) using only 100 vision tokens, and beats MinerU2.0 (over 6,000 tokens per page on average) using fewer than 800.

Essentially, DeepSeek has found a way to represent complex information more efficiently, which is a critical step in overcoming some of AI’s biggest hurdles.

Why Image-Based Compression is a Game-Changer

Think of it like a meme. Using a single image, like the popular Drake format, we can convey a lot of information—emotion, cultural context, humor—that would otherwise take many paragraphs of text to explain. An image acts as a dense packet of information.

This is exactly what DeepSeek OCR is proving. We can take a large amount of text, which would normally consume thousands of tokens, render it as an image, and feed that single image to a Vision Language Model (VLM). The result is substantial compression without a significant loss of meaning or “resolution,” at least up to roughly a 10x compression ratio.
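How many vision tokens does one image cost? Below is a rough sketch. It assumes an encoder that cuts a square image into 16x16-pixel patches, followed by a compressor that merges every 16 patch tokens into one. Those parameters are our assumption. To our understanding they match the native resolution modes described in the DeepSeek OCR paper, but check the paper before relying on them.

```python
def vision_tokens(side_px: int, patch_px: int = 16, compress: int = 16) -> int:
    """Estimate vision tokens for a square image.

    Assumed pipeline: split the image into patch_px x patch_px patches (one token
    each), then merge every `compress` patch tokens into a single vision token.
    """
    if side_px % patch_px:
        raise ValueError("side_px must be a multiple of patch_px")
    patches = (side_px // patch_px) ** 2
    return patches // compress

for side in (512, 640, 1024, 1280):
    print(side, vision_tokens(side))
```

Under these assumptions, a 1024x1024 page costs 256 vision tokens. A dense page of text could easily need several times that many text tokens.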

Solving Core AI Bottlenecks

This efficiency directly addresses several major bottlenecks slowing down AI progress:

- Memory & Context Windows: AI models have a limited “memory,” or context window. Anything beyond that window is cut off, and even within it, quality tends to degrade as the input grows. By compressing large amounts of text into images, we can fit more into the same window, potentially letting models work on larger projects and codebases with less degradation.

- Training Speed & Cost: Training AI models is incredibly expensive and time-consuming, partly due to the sheer volume of data they need to process. By compressing the training data, models could potentially be trained faster and more cheaply. This is especially crucial for research labs that may not have access to the same level of GPU resources as major US companies.

- Attention Cost: Increasing a model’s context window traditionally comes with a quadratic increase in computational cost, because self-attention compares every token with every other token. Visual compression does not remove this scaling, but by shrinking the number of tokens it can sharply reduce the cost, potentially leading to more powerful and efficient models.
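Self-attention compares every token with every other token, so its cost grows with the square of the sequence length. Here is a rough sketch of why fewer tokens matter so much. It assumes a hypothetical 10,000-token document and a 10x optical compression.

```python
def attention_pairs(n_tokens: int) -> int:
    """Pairwise attention scores per head per layer: every token vs. every token."""
    return n_tokens * n_tokens

text_len = 10_000               # hypothetical long document, in text tokens
compressed_len = text_len // 10  # same content at an assumed 10x compression

saving = attention_pairs(text_len) / attention_pairs(compressed_len)
print(f"{saving:.0f}x fewer attention scores")
```

Attention is still quadratic in the compressed length. The saving comes from shrinking the number of tokens, not from changing the scaling law, so a 10x compression buys roughly a 100x reduction in attention scores.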

Expert Insight: Andrej Karpathy on Pixels vs. Text

The significance of this paper wasn’t lost on AI expert Andrej Karpathy. In a post on X, he noted that the most interesting part of the DeepSeek OCR paper is the fundamental question it raises: “whether pixels are better inputs to LLMs than text.”

Karpathy asks whether text tokens are “wasteful and just terrible” at the input stage. He floats the idea that perhaps all inputs to LLMs should only ever be images: even if you have pure text, it might be better to render it as an image first and then feed that into the model.

This approach offers several advantages:

- More Information Compression: Leads to shorter context windows and greater efficiency.

- More General Information Stream: An image can include not just text, but bold text, colored text, and other visual cues that are lost in plain text.

- More Powerful Processing: Input can be processed with bidirectional attention by default, which is more powerful than the autoregressive (causal) attention LLMs normally apply to text.
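The difference between the two attention styles comes down to the attention mask. Below is a minimal sketch in plain Python. The helper names are hypothetical, and real models apply such masks to matrices of attention scores rather than to lists of booleans.

```python
def causal_mask(n: int) -> list[list[bool]]:
    """Autoregressive (causal) mask: position i may attend only to positions 0..i."""
    return [[j <= i for j in range(n)] for i in range(n)]

def bidirectional_mask(n: int) -> list[list[bool]]:
    """Bidirectional mask: every position may attend to every position."""
    return [[True] * n for _ in range(n)]

def visible_pairs(mask: list[list[bool]]) -> int:
    """Count the (query, key) pairs the mask allows."""
    return sum(sum(row) for row in mask)

for name, mask in (("causal", causal_mask(4)), ("bidirectional", bidirectional_mask(4))):
    print(name, visible_pairs(mask))
```

Under a causal mask, the first token of an input never sees anything after it. A bidirectional mask lets every part of the rendered page inform every other part.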

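Karpathy concludes that this paradigm shift means “the tokenizer must go,” at least at the input. Tokenization is a separate, non-end-to-end stage that he criticizes for inheriting the baggage of Unicode and byte encodings, and for turning characters that look identical into completely different tokens.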
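One concrete tokenizer problem shows up at the byte level. Many LLM tokenizers (byte-level BPE, for example) start from UTF-8 bytes. Two letters that look the same on screen can therefore enter the model as entirely different byte sequences. A small illustration with the Latin and Cyrillic letter “a”:

```python
latin_a = "a"          # U+0061 LATIN SMALL LETTER A
cyrillic_a = "\u0430"  # U+0430 CYRILLIC SMALL LETTER A, renders almost identically

for ch in (latin_a, cyrillic_a):
    # The code point and the raw UTF-8 bytes a byte-level tokenizer would start from.
    print(f"U+{ord(ch):04X}", list(ch.encode("utf-8")))
```

A model that reads pixels would see two nearly identical glyphs. A model that reads bytes sees one byte in one case and two unrelated bytes in the other.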

You can read Andrej Karpathy’s full thoughts on his X (Twitter) profile.

A New Blueprint for AI

The work on DeepSeek OCR provides more than just a faster way to process documents; it offers a blueprint for rethinking how information is fed to AI models. By using the visual modality as an efficient compression medium, it opens up new possibilities for combining vision and language. This could dramatically improve computational efficiency in large-scale text processing and agent systems, potentially accelerating everything from financial analysis to the discovery of new cancer therapies. The future of AI might just be more visual than we ever imagined.

What is Machine Learning? The Ultimate Guide to AI’s Core

Ever wondered what machine learning is and how it powers everything from your YouTube recommendations to complex chatbots? You’ve likely got a basic idea: it’s the tech that learns your preferences. But how does it really work, and how does it relate to Artificial Intelligence (AI) and Deep Learning? Let’s break it down into simple, easy-to-understand concepts.

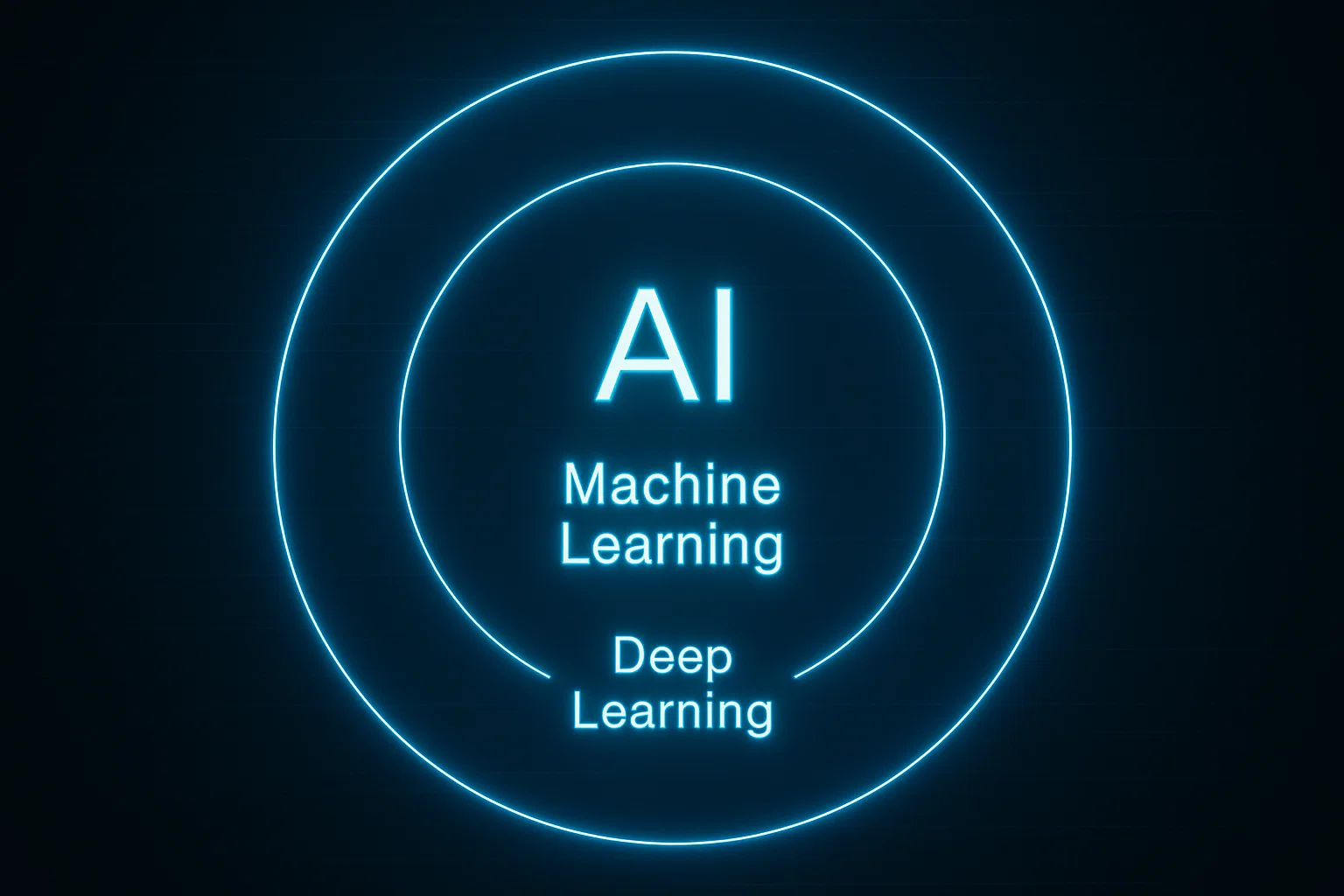

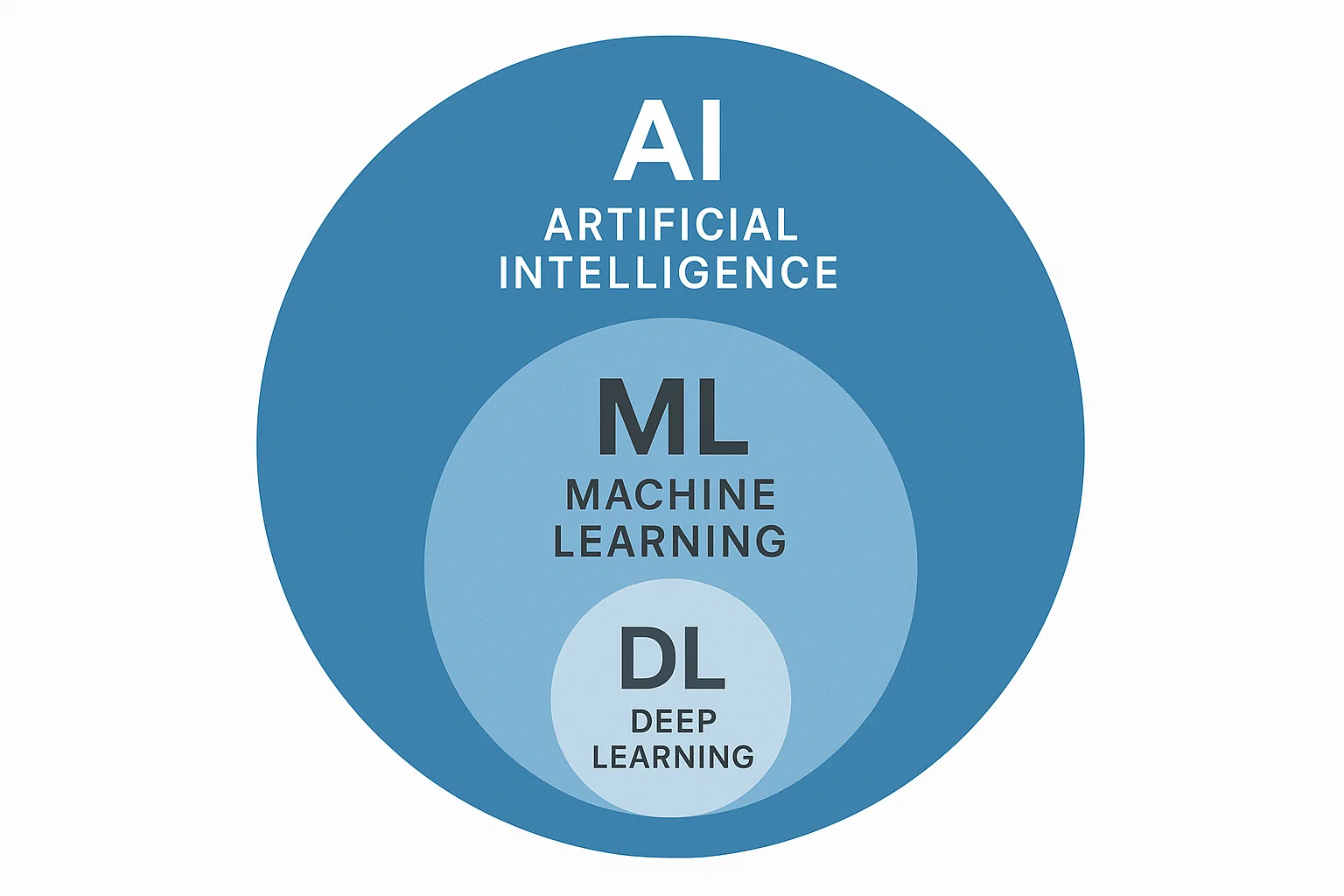

The Hierarchy: AI, Machine Learning (ML), and Deep Learning (DL)

A common point of confusion is whether AI and Machine Learning are the same thing. The simple answer is no; it’s a hierarchy.

- Artificial Intelligence (AI): This is the broadest concept, representing the entire field of making machines intelligent.

- Machine Learning (ML): This is a subset of AI. ML focuses specifically on algorithms that can learn from patterns in training data to make accurate predictions or decisions about new, unseen data. Instead of being explicitly programmed with hard-coded instructions, the machine learns through pattern recognition.

- Deep Learning (DL): This is a subset of Machine Learning. It uses complex structures called neural networks with many layers to learn hierarchical representations of data.

Think of it as a set of Russian nesting dolls: AI is the largest doll, you open it to find the ML doll, and inside that, you find the DL doll.

How Machines Learn: Training vs. Inference

The central idea of machine learning is that if you optimize a machine’s performance on a set of tasks using data that resembles the real world, it can make accurate predictions on new data. This process involves two key stages:

- Model Training: This is the learning phase. A model is fed a large amount of “training data.” The algorithm analyzes this data, identifies patterns, and learns the rules on its own. For example, it might learn to distinguish between pictures of cats and dogs by analyzing thousands of labeled images.

- AI Inference: This is the application phase. Once the model is fully trained, it can be deployed. When you feed this trained model new data, it uses the patterns it learned to “infer” an output or make a prediction. This is where the model actually runs and performs its task.

Essentially, a trained model is simply applying the patterns it learned from training data to make an intelligent guess about the correct output for a real-world task.
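The two stages can be sketched with one of the simplest classifiers, a nearest-centroid model. The model is our illustrative choice, and the features (weight and height) and data are made up. An image classifier would learn its features from pixels, but the train-then-infer split works the same way.

```python
from statistics import fmean

# Hypothetical labeled training data: (weight_kg, height_cm) -> label.
TRAINING_DATA = [
    ((4.0, 25.0), "cat"),
    ((5.0, 23.0), "cat"),
    ((30.0, 60.0), "dog"),
    ((25.0, 55.0), "dog"),
]

def fit_centroids(data):
    """Training: average the examples of each label into one centroid."""
    by_label = {}
    for features, label in data:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(fmean(column) for column in zip(*points))
        for label, points in by_label.items()
    }

def predict(model, features):
    """Inference: return the label whose centroid is closest to the new example."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(features, centroid))
    return min(model, key=lambda label: squared_distance(model[label]))

model = fit_centroids(TRAINING_DATA)  # training happens once
print(predict(model, (6.0, 24.0)))    # inference on an unseen example
```

Training is the expensive, one-time step that produces `model`. Inference reuses that model cheaply for every new input.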

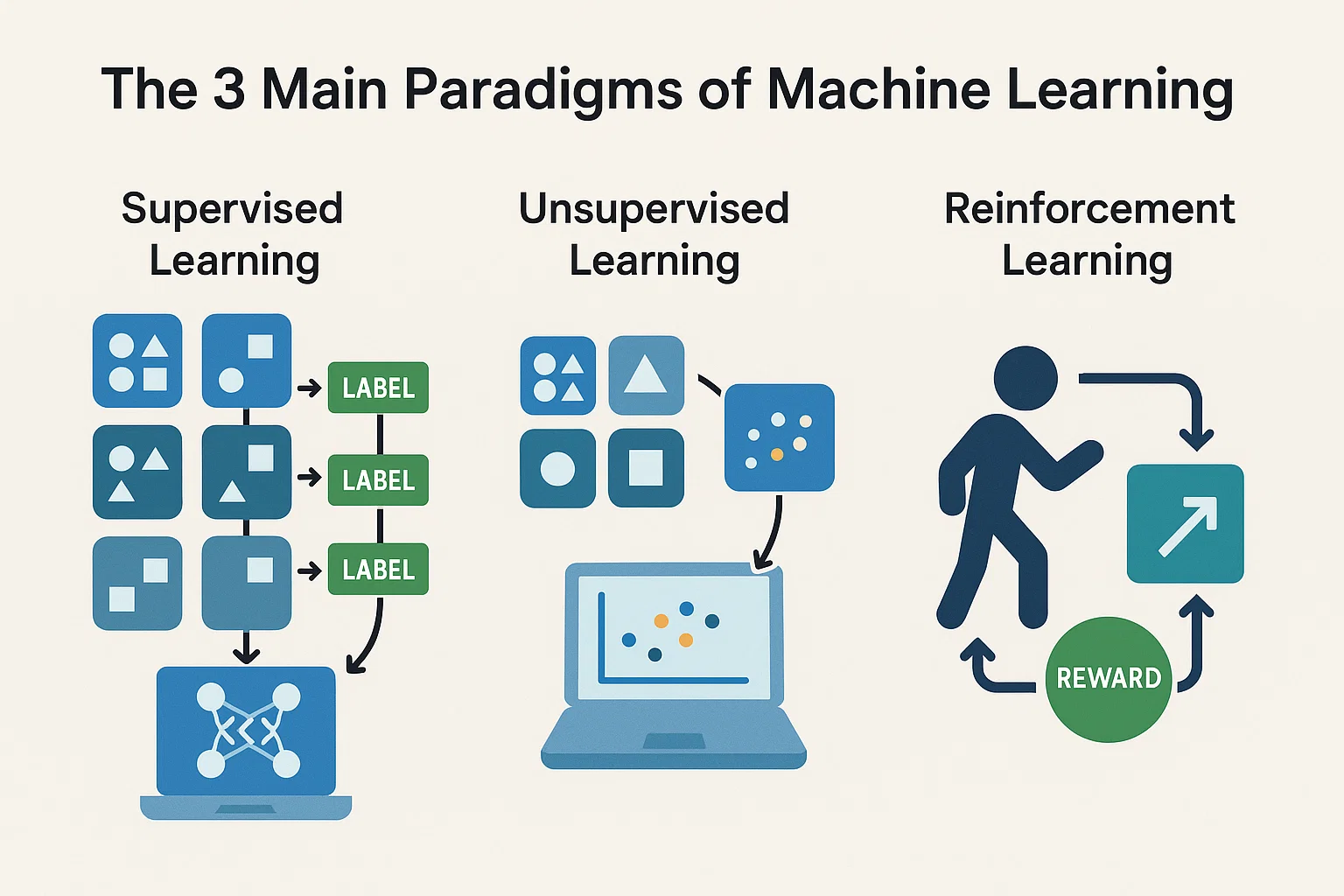

The 3 Main Paradigms of Machine Learning

Most machine learning approaches can be grouped into three primary learning paradigms. Understanding these helps clarify how different AI systems are built.

1. Supervised Learning

This is the most common type of machine learning. In supervised learning, the model is trained on a dataset where the “correct” answers are already known. This data is “labeled.” For instance, a dataset of emails might be labeled as either “Spam” or “Not Spam.” The model learns the features of each category to classify new, unlabeled emails. It’s called “supervised” because training is guided by these known answers (the ground truth), which often have to be provided by humans.

Supervised learning is typically used for two types of tasks:

- Classification: Used for predicting discrete classes. For example, is this email spam or not? This can be Binary (two options), Multi-Class (predicting one of many categories), or Multi-Label (assigning multiple tags to one item).

- Regression: Used for predicting continuous numerical values. Examples include predicting a house price, forecasting temperature, or estimating future sales.

2. Unsupervised Learning

Unlike supervised learning, unsupervised learning works with unlabeled data. The goal here is for the model to discover hidden structures and patterns on its own, without any pre-existing “correct answers.”

Common tasks for unsupervised learning include:

- Clustering: Grouping similar data points together. For example, segmenting customers into different groups based on their purchasing behavior.

- Dimensionality Reduction: Reducing the number of variables (features) in a dataset while retaining the important information. This simplifies the data for easier processing and visualization.
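Clustering can be illustrated with k-means, one common algorithm that we chose for the sketch. It works on hypothetical one-dimensional customer data and alternates two steps. First it assigns each point to the nearest centroid. Then it moves each centroid to the mean of its points.

```python
def kmeans_1d(values: list[float], k: int, iterations: int = 10):
    """Plain k-means on 1-D data.

    Centroids start at the first k values, an arbitrary but deterministic choice.
    """
    centroids = list(values[:k])
    clusters: list[list[float]] = []
    for _ in range(iterations):
        # Assignment step: put each value in the cluster with the nearest centroid.
        clusters = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda i: abs(v - centroids[i]))
            clusters[nearest].append(v)
        # Update step: move each centroid to the mean of its cluster
        # (keep the old centroid if a cluster ends up empty).
        centroids = [
            sum(c) / len(c) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids, clusters

# Hypothetical monthly spend per customer, in dollars (no labels given).
spend = [20, 25, 22, 300, 320, 310]
centroids, clusters = kmeans_1d(spend, k=2)
print(clusters)
```

Nobody told the algorithm which customers are “low spenders” and which are “high spenders.” It found the two groups from the data alone.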

3. Reinforcement Learning

Reinforcement Learning is a fascinating paradigm where a model (or “agent”) learns by interacting with an environment. It’s based on a system of trial and error.

- The agent observes the current State of the environment.

- It chooses an Action to perform.

- It receives a Reward for a good action or a Penalty for a bad one.

Over time, the agent learns a “policy,” or strategy, that maximizes its long-term rewards. A self-driving car is a common illustration: it would be rewarded for staying in its lane and obeying traffic signals but penalized for hard braking or, even worse, collisions. This feedback loop helps it learn to navigate the world safely and efficiently.
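“Long-term rewards” are usually formalized as the discounted return, G = r0 + γ·r1 + γ²·r2 and so on. The discount factor γ, between 0 and 1, makes distant rewards count less. γ is a standard RL convention rather than something this article specifies, and the per-step rewards below are hypothetical.

```python
def discounted_return(rewards: list[float], gamma: float = 0.99) -> float:
    """Sum of rewards where the reward at step t is weighted by gamma ** t."""
    if not 0.0 <= gamma <= 1.0:
        raise ValueError("gamma must be between 0 and 1")
    return sum((gamma ** t) * r for t, r in enumerate(rewards))

# Hypothetical per-step rewards: two steps of good lane-keeping, then a hard brake.
print(discounted_return([1.0, 1.0, -10.0], gamma=0.9))
```

With γ close to 1, the agent weighs future consequences heavily. With a smaller γ, it favors immediate rewards.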

From Classic ML to Modern AI

While many of these concepts, like regression and clustering, have been around for years and are still fundamental to business analytics, they also form the bedrock of today’s most advanced AI. Modern marvels like Large Language Models (LLMs) are built on top of an architecture called a Transformer, but they still rely on the core principles of pattern recognition, model training, and inference.

Even Reinforcement Learning has seen a resurgence with RLHF (Reinforcement Learning from Human Feedback), a technique used to fine-tune LLMs to better align with human preferences. This shows that researchers continue to find new ways to apply and scale the foundational concepts of machine learning.

To understand the architecture powering modern LLMs, you can explore the original 2017 Google Research paper that introduced the Transformer, “Attention Is All You Need.”

AI for Language Learning: Discover the Ultimate Trick to Study Smarter

Are you struggling to find engaging materials for your language studies? The old methods of relying solely on textbooks are being revolutionized by the power of AI for language learning. In this guide, we’ll reveal an incredible technique that turns any online video—whether it’s on YouTube, Vimeo, or another platform—into a comprehensive, interactive lesson. This method, ideal for both teachers and students, leverages two powerful AI tools to create transcripts, translations, vocabulary lists, and even quizzes, making your study sessions more effective and dynamic than ever before.

The Game-Changing Tools for AI Language Study

The speaker in the video, a polyglot currently learning his fourth language (Polish), explains that his study methods have completely changed thanks to new AI technologies. He highlights two specific tools that he uses constantly. The criteria for choosing these tools are simple but powerful:

- Free Option: Both tools offer a robust free version, making them accessible to everyone.

- Multi-Language Support: They aren’t just for English; you can use them for Polish, French, Spanish, German, and many other languages.

- Versatile: They are perfect for both language teachers creating materials and students studying independently.

The first and most foundational tool in this process is an AI transcription service called TurboScribe.ai.

Step 1: Transcribe Any Video in Minutes with TurboScribe.ai

TurboScribe is a fantastic AI tool that converts audio and video into accurate text transcripts. The free plan allows you to transcribe up to three files per day, each up to 30 minutes long—more than enough for most study sessions.

Here’s how to use this powerful tool for your language learning journey:

How to Get Your Transcript

- Find a YouTube video (or any other online video) in the language you’re studying. It could be a news report, a vlog, or a tutorial.

- Copy the URL of the video.

- Go to TurboScribe.ai and sign up for a free account.

- In your dashboard, click “Transcribe Files” and then select the “Import from Link” option.

- Paste the video URL, choose the audio language, and click “Transcribe.”

Within a few minutes, TurboScribe will process the video and provide you with a full, accurate transcript. Not only that, but it also extracts the audio, allowing you to listen along as the text is highlighted word-for-word. This is an excellent feature for improving listening skills and pronunciation. Once the transcript is ready, you can download it as a PDF, TXT, or DOCX file.

Step 2: Create Custom Learning Materials with an LLM (like Gemini or ChatGPT)

Now that you have your transcript as a DOCX or TXT file, the real magic begins. This is where you can leverage a Large Language Model (LLM) like Google Gemini or ChatGPT to turn that raw text into tailored study materials. The video demonstrates this using Google Gemini’s ability to upload files directly.

From Transcript to Interactive Lesson

After uploading the transcript file to your chosen AI chat tool, you can give it specific prompts to generate exercises. This is the core trick of using AI for language learning this way. Try asking for things like:

- “Based on this transcript, can you create a list of 8 key vocabulary words I need to learn to understand it?”

- “Can you make 5 multiple-choice comprehension questions based on the text?”

- “Provide a one-paragraph summary of this transcript.”

- “Extract all the phrases related to ordering food from this text.”
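You may prefer to paste the transcript into the chat rather than upload it. In that case, a small helper can pair each prompt with the transcript text. Below is a sketch using only Python's standard library. The function names and the `transcript.txt` file name are hypothetical, and the code makes no calls to Gemini or ChatGPT.

```python
from pathlib import Path

# The example prompts from this guide.
PROMPTS = (
    "Based on this transcript, can you create a list of 8 key vocabulary words "
    "I need to learn to understand it?",
    "Can you make 5 multiple-choice comprehension questions based on the text?",
    "Provide a one-paragraph summary of this transcript.",
    "Extract all the phrases related to ordering food from this text.",
)

def build_messages(transcript: str, prompts: tuple[str, ...] = PROMPTS) -> list[str]:
    """Pair each prompt with the transcript so it can be pasted into any chat tool."""
    return [f"{prompt}\n\nTranscript:\n{transcript.strip()}" for prompt in prompts]

def load_transcript(path: str) -> str:
    """Read a TXT transcript, such as one downloaded from TurboScribe."""
    return Path(path).read_text(encoding="utf-8")

# Usage (hypothetical file name):
# for message in build_messages(load_transcript("transcript.txt")):
#     print(message, end="\n\n---\n\n")
```

Keeping the prompts in one place makes it easy to reuse the same set of exercises for every new video.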

The AI will instantly analyze the text and provide exactly what you asked for. You can create vocabulary lists, true/false questions, gap-fill exercises, or summaries to test your understanding. You can then take these materials and study them before going back to watch the original video again, reinforcing what you’ve learned. It’s a powerful feedback loop for rapid improvement.
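Once you have a vocabulary list, gap-fill exercises can also be made without an LLM. This is a swapped-in, rule-based technique, not the method shown in the video. The sketch blanks out whole-word matches of each target word. The function name and example sentence are illustrative.

```python
import re

def gap_fill(text: str, words: list[str], blank: str = "_____") -> str:
    """Replace each whole-word occurrence of the target words with a blank."""
    for word in words:
        # \b matches Unicode word boundaries, so accented letters count as letters.
        pattern = r"\b" + re.escape(word) + r"\b"
        text = re.sub(pattern, blank, text, flags=re.IGNORECASE)
    return text

print(gap_fill("Poproszę kawę i ciastko.", ["kawę"]))
```

Whole-word matching means a longer word that merely contains a target word (for example “kawałek” when blanking “kawa”) is left intact.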

Bonus Tip: Use Google Translate’s “Saved” Feature

As you work through your transcript and generated vocabulary, you can use Google Translate to look up individual words or phrases. But did you know you can create a personal phrasebook? Simply click the star icon next to any translation to save it to your list. This creates a custom set of flashcards you can review anytime, complete with audio playback to practice pronunciation. This is yet another simple but effective way to use AI for language learning in your daily routine.

By combining these free AI tools, you can create a nearly infinite supply of high-quality, relevant, and engaging learning materials from content you already enjoy watching. Give it a try and discover a smarter way to study!
