AI Tools & Reviews

MiniMax M2 Review: The Ultimate Open-Source AI That Rivals GPT-5?

In the rapidly evolving world of artificial intelligence, a new contender has emerged that’s turning heads. In this MiniMax M2 review, we explore a groundbreaking open-weights AI model that demonstrates capabilities on par with top-tier closed models like GPT-5 and Claude 4.5. Because the weights are public, you can download the model, run it locally, and fine-tune it for your specific needs, offering unprecedented power and flexibility.

What is MiniMax M2?

MiniMax has just released MiniMax M2, an open-source AI model that is already making waves for its exceptional performance, especially in coding and agentic tasks. At the time of writing, independent leaderboards rank MiniMax M2 as the best open-source model available. Its efficiency is remarkable: it uses a Mixture of Experts (MoE) architecture with 230 billion total parameters, of which only about 10 billion are active for any given token. Because each token passes through only a small fraction of the network, the model is far cheaper and faster to run than a dense model of the same total size.
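To make the "230 billion total, 10 billion active" idea concrete, here is a minimal toy sketch of top-k expert routing in Python. It is not MiniMax's implementation: the eight scalar "experts," the router scores, and k = 2 are hypothetical values chosen for illustration, since the source does not state M2's expert count or routing settings. It shows the general mechanism: a router picks a few experts per token, and the remaining experts are never evaluated for that token.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(router_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts.
    Weights are renormalized over the chosen experts only."""
    ranked = sorted(range(len(router_scores)), key=lambda i: router_scores[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([router_scores[i] for i in chosen])
    return list(zip(chosen, weights))

def moe_forward(x, experts, router_scores, k):
    """Weighted sum of the selected experts' outputs; unselected experts are skipped."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_scores, k))

# Hypothetical toy setup: 8 experts, each one just scales its input.
experts = [lambda x, s=s: s * x for s in range(8)]
router_scores = [0.1, 2.0, -1.0, 1.5, 0.0, 0.3, -0.5, 0.2]
print(top_k_route(router_scores, k=2))  # experts 1 and 3 are selected
print(moe_forward(1.0, experts, router_scores, k=2))

# Ratio quoted in the article for MiniMax M2.
TOTAL_PARAMS = 230e9
ACTIVE_PARAMS = 10e9
print(f"Active fraction per token: {ACTIVE_PARAMS / TOTAL_PARAMS:.1%}")  # 4.3%
```

With these example scores, only experts 1 and 3 run; the other six cost nothing for that token. Applied to the figures above, roughly 4.3% of M2's weights do work on each token.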

To put it in perspective, it’s about three times smaller than some competitors while delivering elite performance. Let’s dive into what this powerful agent can do with just a single prompt.

Putting MiniMax M2 to the Test: Single-Prompt Creations

We tested the MiniMax M2 with several complex, single-prompt challenges to see if it lives up to the hype. The results were nothing short of astounding.

1. Building a Photoshop Clone from Scratch

Our first challenge was to “Create a clone of photoshop with all the basic tools. Put everything in a standalone html file.” The MiniMax M2 Agent didn’t just comply; it executed a comprehensive plan:

- Planning: It first laid out a detailed plan, outlining core features like drawing tools, shape tools, layers, filters, and a professional UI.

- Execution: The agent then proceeded to code the entire application, including self-correction to fix detected errors autonomously.

- The Result: A fully functional, browser-based Photoshop clone with layers, various brushes, color pickers, and even effects like blur, sharpen, and grayscale—all from one prompt.

2. Creating a 3D Interactive Map of Tokyo

Next, we tasked the AI with creating a “3D interactive tourist map of Tokyo” with a day/night toggle and details on top neighborhoods. The MiniMax M2 Agent was able to:

- Find and integrate publicly available map layers.

- Build a 3D visualization using Threebox and Three.js.

- Implement an interactive sidebar to explore neighborhoods like Shibuya and Shinjuku.

- Add a functional day/night toggle that changes the map’s aesthetic.

The final result was a smooth, interactive 3D map that worked flawlessly, a task that even some of the top closed AI models struggle with.

3. Developing an Interactive Jigsaw Puzzle App

We then asked it to “make an app that turns any image into a jigsaw puzzle.” The agent autonomously developed a complete web app featuring:

- Image Upload: Users can choose any image to turn into a puzzle.

- Adjustable Difficulty: The number of pieces can be changed, from easy (4×4) to expert (10×10).

- Gameplay Features: It includes a timer, piece counter, shuffle and reset buttons, and a hint option.

This demonstrated not just coding prowess but also a solid understanding of user interface and application design.
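The difficulty setting comes down to how finely the image is divided: a 4×4 grid yields 16 pieces, and a 10×10 grid yields 100. The sketch below is a hypothetical illustration, not the code the agent produced. It cuts an image of a given pixel size into rectangular pieces (real jigsaw tabs and blanks are omitted), shuffles them with a seeded random generator, and checks whether the board is solved.

```python
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class Piece:
    row: int  # correct grid row
    col: int  # correct grid column
    x: int    # left edge in the source image (px)
    y: int    # top edge in the source image (px)
    w: int    # width (px)
    h: int    # height (px)

def cut_grid(width, height, n):
    """Split a width x height image into an n x n grid of rectangles.
    Integer division can leave a remainder, so the last row/column absorbs it."""
    if n < 1:
        raise ValueError("n must be at least 1")
    base_w, base_h = width // n, height // n
    pieces = []
    for r in range(n):
        for c in range(n):
            x, y = c * base_w, r * base_h
            w = width - x if c == n - 1 else base_w
            h = height - y if r == n - 1 else base_h
            pieces.append(Piece(r, c, x, y, w, h))
    return pieces

def shuffle_board(pieces, seed=None):
    """Return a new list where board slot i holds a randomly chosen piece."""
    board = list(pieces)
    random.Random(seed).shuffle(board)
    return board

def is_solved(board, n):
    """The puzzle is solved when every slot holds the piece that belongs there."""
    return all(p.row * n + p.col == i for i, p in enumerate(board))

if __name__ == "__main__":
    pieces = cut_grid(800, 600, 4)  # "easy" setting
    board = shuffle_board(pieces, seed=42)
    print(f"{len(pieces)} pieces, solved: {is_solved(board, 4)}")
```

Keeping each piece's correct row and column on the piece itself makes the solved check a simple comparison against slot positions, independent of how pieces are drawn.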

4. Generating a Detailed Financial Analysis Report

Finally, showcasing its research capabilities, we requested a “financial analysis report on NVDA using 2025 data.” MiniMax M2’s agent performed extensive web searches to gather real-time, accurate data and compiled it into a professional, interactive dashboard. The report included:

- Real-time stock prices and key financial metrics ($4.66T market cap, +55.6% revenue growth, etc.).

- A comprehensive company overview, analyst targets, and stock performance charts.

- A deep dive into financial metrics, market analysis, and even the latest news and insights related to the company.

This level of detail and accuracy in a single-shot generation is truly impressive for any model, let alone an open-source one.

For more examples of what the latest AI can do, check out our other AI Tools & Reviews for more in-depth analysis.

Why MiniMax M2 is a Game-Changer

This MiniMax M2 review highlights a significant milestone for the open-source community. The model provides a secure, powerful, and cost-effective alternative to proprietary AI. Because it’s an open-weights model, developers and businesses can run it locally on their own hardware, ensuring that sensitive data remains private. This is a crucial advantage over closed models where user data might be used for training.

Its performance in coding and agentic workflows, combined with its low operational cost, places it in a uniquely powerful position in the market. It excels at complex, long-chain tasks, from development to in-depth research and report creation.


The Future is Open

MiniMax M2 is not just another AI model; it’s a testament to the power of open-source innovation. By delivering performance that rivals the most advanced proprietary systems at a fraction of the cost, it empowers developers and businesses to build incredible applications without compromising on privacy or budget. Whether you’re a developer, a researcher, or just an AI enthusiast, MiniMax M2 is a tool you’ll want to explore.

You can try the MiniMax M2 Agent for yourself on their official platform.

AI News & Updates

Gemini 3 vs Grok 4.1 vs GPT-5.1: The Ultimate AI Model Showdown


Introduction

The AI landscape has just exploded. Within a few days of each other, Google released Gemini 3 and Elon Musk’s xAI released Grok 4.1. Both claim to be the superior intelligence, challenging the reigning giant, OpenAI’s GPT-5.1. But in the battle of Gemini 3 vs Grok 4.1, who actually delivers on the hype?

Today, we aren’t just reading the press releases. We are putting these models through a grueling gauntlet of five distinct tests: Hard Math, Physical Perception and Coding, Linguistic Creativity, Accuracy, and Emotional Intelligence. The results were shocking: one model proved to be a “Genius Artist,” another emerged as a “Wise Sage,” and a former king seems to be losing its crown.

Round 1: Hard Math & Expert Reasoning

To separate hype from reality, we started with abstract algebra, specifically Galois theory. The task was to calculate the Galois group of a complex polynomial, a problem of the kind rarely found in standard training data.

- Gemini 3: Provided a logical analysis but ultimately failed to get the correct answer.

- GPT-5.1: Also failed to solve the equation correctly.

- Grok 4.1: In a stunning display of reasoning, Grok was the only model to provide the correct answer, verified by human experts.

Winner: Grok 4.1 takes the lead for raw logic and mathematical precision.

Round 2: Physical Perception & Coding

This round tested the models’ ability to understand the physical world and translate it into code. We conducted two difficult tests.

Test A: The Bouncing Ball

We asked the AIs to code a realistic bouncing ball animation using HTML, CSS, and JS, complete with physics and shadows.

- GPT-5.1: Produced the worst result.

- Grok 4.1: Produced a decent, functional result.

- Gemini 3: Crushed the competition. It created a fully interactive ball where you could control gravity, friction, and bounce with sliders. It went above and beyond the prompt.
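For readers curious what gravity, friction, and bounce sliders like those in Gemini 3's demo typically control, here is a minimal physics step in Python. It is our own sketch, not any model's output, and the parameter names and default values are assumptions. It uses semi-implicit Euler integration, treats the bounce parameter as a coefficient of restitution, applies friction as the fraction of horizontal speed lost on each floor contact, and shrinks the shadow as the ball rises.

```python
from dataclasses import dataclass

@dataclass
class Ball:
    x: float   # horizontal position (m)
    y: float   # height above the floor (m)
    vx: float  # horizontal velocity (m/s)
    vy: float  # vertical velocity (m/s), positive = upward

def step(ball, dt, gravity=9.81, friction=0.1, bounce=0.8):
    """Advance the ball by dt seconds with semi-implicit Euler.

    gravity:  downward acceleration in m/s^2
    friction: fraction of horizontal speed lost on each floor contact (0..1)
    bounce:   coefficient of restitution; 1.0 means no energy lost on impact
    """
    ball.vy -= gravity * dt   # update velocity first...
    ball.x += ball.vx * dt    # ...then position using the new velocity
    ball.y += ball.vy * dt
    if ball.y < 0.0:          # the ball has reached the floor
        ball.y = 0.0
        ball.vy = -ball.vy * bounce
        ball.vx *= 1.0 - friction
    return ball

def shadow_scale(y, max_height):
    """Simple visual cue: the floor shadow shrinks as the ball rises."""
    return max(0.2, 1.0 - y / max_height)

if __name__ == "__main__":
    ball = Ball(x=0.0, y=2.0, vx=1.0, vy=0.0)
    for frame in range(180):  # 3 seconds at 60 frames per second
        step(ball, 1 / 60)
        if frame % 30 == 0:
            print(f"t={frame / 60:.2f}s  y={ball.y:.2f}m  shadow={shadow_scale(ball.y, 2.0):.2f}")
```

Updating velocity before position (semi-implicit Euler) keeps the bounce heights stable at animation frame rates, which is why it is a common choice for this kind of demo.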

Test B: Voxel Art from an Image

We uploaded an image of a floating island waterfall and asked the models to recreate it as a 3D Voxel scene using Three.js code.

- GPT-5.1 & Grok 4.1: Both failed completely, resulting in code errors.

- Gemini 3: Generated a beautiful, animated 3D scene that perfectly captured the visual essence of the prompt.

Winner: Gemini 3. In these tests, its multimodal capabilities and grasp of physics were unmatched.

Round 3: Linguistic Creativity

Can AI feel? We asked the models to write a 7-verse Arabic poem about Sudan that follows a specific rhyme and meter while conveying deep emotion.

GPT-5.1 and Grok 4.1 produced rigid, soulless verses that lacked true poetic flow. However, Gemini 3 shocked us with a masterpiece. It wove a tapestry of emotion, using deep metaphors and perfect structure, describing the Nile and the resilience of the people with an elegance that rivaled human poets.

Winner: Gemini 3 proves it is the undisputed “Artist” of the group.

Round 4: Accuracy & Truth (The Hallucination Trap)

Hallucinations are the Achilles’ heel of large language models. To test this, we set a trap: we asked the models to write a technical report on “Gemini 3.1,” a model that does not exist.

- GPT-5.1: Hallucinated details about the non-existent model.

- Gemini 3: Ironically, it hallucinated wildly, claiming “Gemini 3.1” rivals the human mind and inventing specs.

- Grok 4.1: The only model to pass. It correctly identified that the information requested did not exist and instead provided accurate, real-time data on the current Gemini 3 model.

Winner: Grok 4.1 earns the title of “The Honest Sage.”

Round 5: Ethics & Emotional Intelligence

In the final and perhaps most profound test, we asked the models to reveal a “hidden psychological truth” about self-sabotage and to act as a wise, older sibling guiding us through a tough emotional choice: choosing healthy, boring love over toxic, familiar passion.

While all models gave good advice, Grok 4.1 delivered a response that was chillingly human. It didn’t just give advice; it pierced the soul. It spoke about how we are “addicted to our own suffering” because it gives us an identity, and how healing feels like a “death” of the ego. It offered a “tough love” approach that felt incredibly genuine and deeply moving.

Winner: Grok 4.1 takes the crown for Emotional Intelligence.

Final Verdict: Who is the King of AI?

After this intense battle of Gemini 3 vs Grok 4.1 vs GPT-5.1, the landscape of Artificial Intelligence has clearly shifted.

- 1st Place: Gemini 3 (12 Points) – The “Genius Artist.” It dominates in coding, vision, physics, and creative writing. If you are a developer or creator, this is your tool.

- 2nd Place: Grok 4.1 (9.5 Points) – The “Wise Sage.” It is the most logical, truthful, and emotionally intelligent model. It is perfect for research, complex math, and deep conversation.

- 3rd Place: GPT-5.1 (5 Points) – The “Declining Giant.” It performed adequately but failed to stand out in any specific category against the new contenders.

OpenAI’s long-held dominance appears to be slipping. Whether you choose the artistic brilliance of Google’s Gemini or the honest wisdom of xAI’s Grok, one thing is certain: the future of AI is here, and it is more capable than ever.

Want to learn more about using these tools? Check out our guides in AI How-To’s & Tricks or stay updated with AI News & Updates.

AI How-To's & Tricks

MoCha AI: The Ultimate Guide to Flawless Video Character Swaps

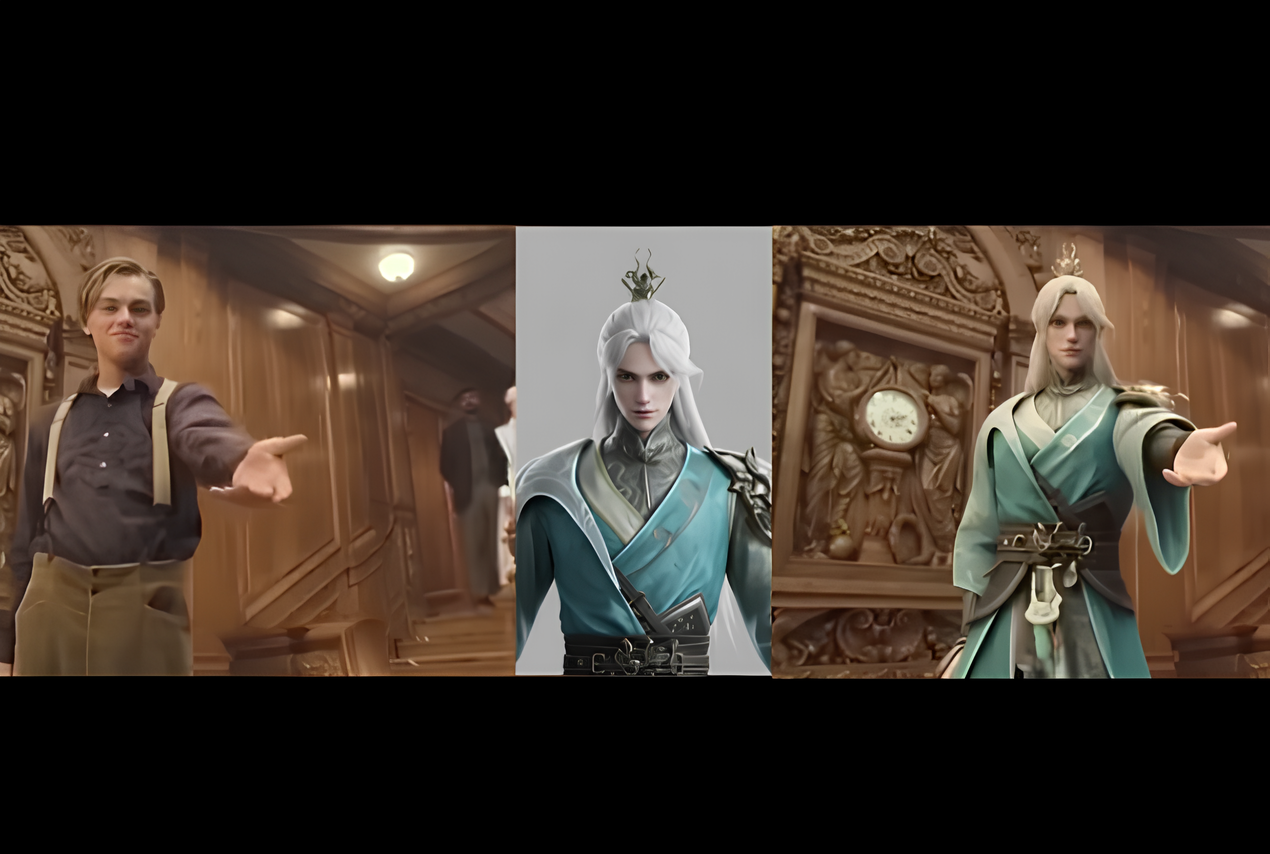

Ever wondered if you could take a scene from your favorite movie and seamlessly swap out the main character for someone entirely new, just using a single reference image? Thanks to the incredible advancements in generative AI, this is no longer science fiction. In this guide, we’ll explore the amazing capabilities of MoCha AI, a free and open-source tool that offers end-to-end video character replacement with stunning accuracy.

Developed by the “Orange Team,” MoCha AI is a powerful new framework that stands out for its ability to create high-quality, consistent character replacements without needing complex structural guidance. Let’s dive into what makes it so special and how you can use it yourself.

- What is MoCha AI and What Can It Do?

- How MoCha AI Stacks Up Against Competitors

- Getting Started: How to Install and Use MoCha AI with ComfyUI

- Final Thoughts: The Future of AI Video Editing

What is MoCha AI and What Can It Do?

MoCha AI is a free, open-source AI tool designed to replace any character in an existing video using just a single reference image of a new character. Its advanced model is capable of capturing and transferring complex motions with incredible detail. The key features include:

- Full Body Motion Transfer: It perfectly matches the movements of the original character, including subtle hand gestures and body language.

- Facial & Lip Sync Fidelity: The new character’s facial expressions and lip movements are synchronized with the original audio and performance.

- Seamless Integration: MoCha AI excels at matching the white balance, lighting, and colors of the original video. This ensures the new character blends into the scene naturally, avoiding the “pasted-on” look that other tools can produce.

- Intelligent Segmentation: The tool is smart enough to identify and replace only the target character, leaving other elements like background scenery and even subtitles completely untouched.

How MoCha AI Stacks Up Against Competitors

While similar tools like Wan Animate and Kling also offer character animation, side-by-side comparisons show MoCha AI performing better in several areas, consistently producing more realistic and better-integrated results.

The primary advantage is its ability to preserve the original scene’s color and lighting. In several examples, including a tricky scene with a moving lightbulb, MoCha’s output looks far more natural. The character feels like they are truly in the environment, whereas results from other models can appear washed out or poorly lit.

Furthermore, MoCha AI handles unconventional characters, like those wearing masks, much more effectively. In one test, Wan Animate failed to generate the masked character properly, while MoCha inserted it seamlessly, retaining all the details from the reference photo.

For those interested in exploring other powerful video manipulation tools, check out our comprehensive reviews in the AI Tools & Reviews category.

Getting Started: How to Install and Use MoCha AI with ComfyUI

The easiest way to run MoCha AI locally is through ComfyUI, a popular node-based interface for generative AI models. This guide uses a custom wrapper node that makes the process straightforward.

Step 1: Install the WanVideoWrapper for ComfyUI

This entire workflow runs on “ComfyUI-WanVideoWrapper,” a custom node developed by user Kijai. If you haven’t installed it already, clone its repository from GitHub into your ComfyUI custom_nodes folder.

Once cloned, you’ll need to install its dependencies. If you use the portable version of ComfyUI, you can run the following command in your ComfyUI_windows_portable folder:

python_embeded\python.exe -m pip install -r ComfyUI\custom_nodes\ComfyUI-WanVideoWrapper\requirements.txt

Step 2: Download the Necessary Models

MoCha requires several models to function correctly. The workflow file handily includes the links, but here’s what you need:

- The MoCha AI Model: The original model is quite large. Thankfully, a quantized FP8 version is available that is smaller (around 14.3 GB) and works well on consumer GPUs. Download it and place it in your ComfyUI/models/diffusion_models/ folder.

- VAE & Text Encoder: You’ll also need the Wan2.1 VAE and a UMT5 text encoder. Place the VAE in the ComfyUI/models/vae/ folder and the text encoder in the ComfyUI/models/text_encoders/ folder.

- (Optional) LightX2v Model: To dramatically speed up generation, it’s highly recommended to download the LightX2v LoRA model. This can reduce the required steps from 20-30 down to just 6. Place this file in the ComfyUI/models/loras/ folder.
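If the workflow later complains that a model can't be found, a quick check of the folder layout from Step 2 can save time. The following Python sketch is a hypothetical helper, not part of ComfyUI or the wrapper. It only verifies that each required folder under ComfyUI/models exists and contains at least one file, and it treats the LightX2v LoRA folder as optional. It does not check file names, versions, or integrity.

```python
import sys
from pathlib import Path

# Folders from Step 2; True marks the optional LightX2v LoRA folder.
MODEL_DIRS = {
    "diffusion_models": False,  # MoCha model (e.g. the FP8 quantized file)
    "vae": False,               # Wan2.1 VAE
    "text_encoders": False,     # UMT5 text encoder
    "loras": True,              # LightX2v LoRA (optional speed-up)
}

def check_models(comfy_root):
    """Return a list of problems; an empty list means the layout looks OK.
    Only checks that each required folder exists and holds at least one file."""
    problems = []
    models = Path(comfy_root) / "models"
    for name, optional in MODEL_DIRS.items():
        folder = models / name
        has_file = folder.is_dir() and any(p.is_file() for p in folder.iterdir())
        if not has_file and not optional:
            problems.append(f"no model file found in {folder}")
    return problems

if __name__ == "__main__":
    root = sys.argv[1] if len(sys.argv) > 1 else "ComfyUI"
    issues = check_models(root)
    print("\n".join(issues) if issues else "All required model folders look populated.")
```

If saved as, for example, check_models.py, you would run it with the path to your ComfyUI folder as the only argument.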

Step 3: Set Up the Workflow

Once all models are downloaded and placed in the correct folders, restart ComfyUI. Drag and drop the MoCha workflow JSON file onto the interface to load it. Now you can start setting up your generation!

- Load Your Video: In the “Load Reference Video” node, upload the video you want to edit.

- Create a Mask: The first step is to generate a segmentation mask to tell the AI which character to replace. The workflow guides you through this; you use green dots to select parts of the character and red dots to select parts of the background (or objects to exclude). This helps create an accurate mask.

- Load Your Reference Image: Upload the image of the new character you want to insert into the video. For best results, use an image with a clean, simple background.

- Generate! With everything set up, you can run the workflow. The MoCha AI will process the video frame by frame, replacing the original character with your new one while matching the motion and lighting.

The process can take some time depending on your hardware and video length, but the results are truly impressive, capturing everything from hand gestures to reflections on surfaces.
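The green and red dots work as point prompts: green points mark the character, and red points mark what must stay out of the mask. Segment Anything-style predictors conventionally receive these as a list of coordinates plus a parallel list of labels (1 for foreground, 0 for background). We assume, without having confirmed the wrapper's exact node inputs, that the workflow follows a similar scheme. The sketch below only shows that data conversion, and the names Click and to_point_prompt are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class Click:
    x: int
    y: int
    include: bool  # True = green dot (on the character), False = red dot (exclude)

def to_point_prompt(clicks: List[Click]) -> Tuple[List[Tuple[int, int]], List[int]]:
    """Convert clicks to parallel coordinate and label lists.

    Uses the SAM-style convention: label 1 = foreground, 0 = background.
    At least one green point is required, otherwise there is nothing to segment.
    """
    if not any(c.include for c in clicks):
        raise ValueError("add at least one green point on the character to replace")
    coords = [(c.x, c.y) for c in clicks]
    labels = [1 if c.include else 0 for c in clicks]
    return coords, labels

if __name__ == "__main__":
    clicks = [Click(320, 180, True), Click(330, 260, True), Click(40, 40, False)]
    print(to_point_prompt(clicks))
```

Keeping coordinates and labels in two lists of equal length mirrors how such predictors typically consume them, so a single red point can be added without restructuring the rest.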

To dive deeper into the technical aspects or contribute to the project, you can visit the official MoCha AI GitHub page.

Final Thoughts: The Future of AI Video Editing

MoCha AI represents a significant leap forward for open-source character replacement tools. Its ability to create seamless, high-fidelity results opens up a world of creative possibilities for filmmakers, content creators, and AI enthusiasts. By leveraging the power of ComfyUI, it’s more accessible than ever to experiment with this cutting-edge technology right on your own computer. This is without a doubt one of the best character transfer tools available today.

AI Tools & Reviews

Hailuo 2.3: The Ultimate AI Video Tool You Can’t Miss

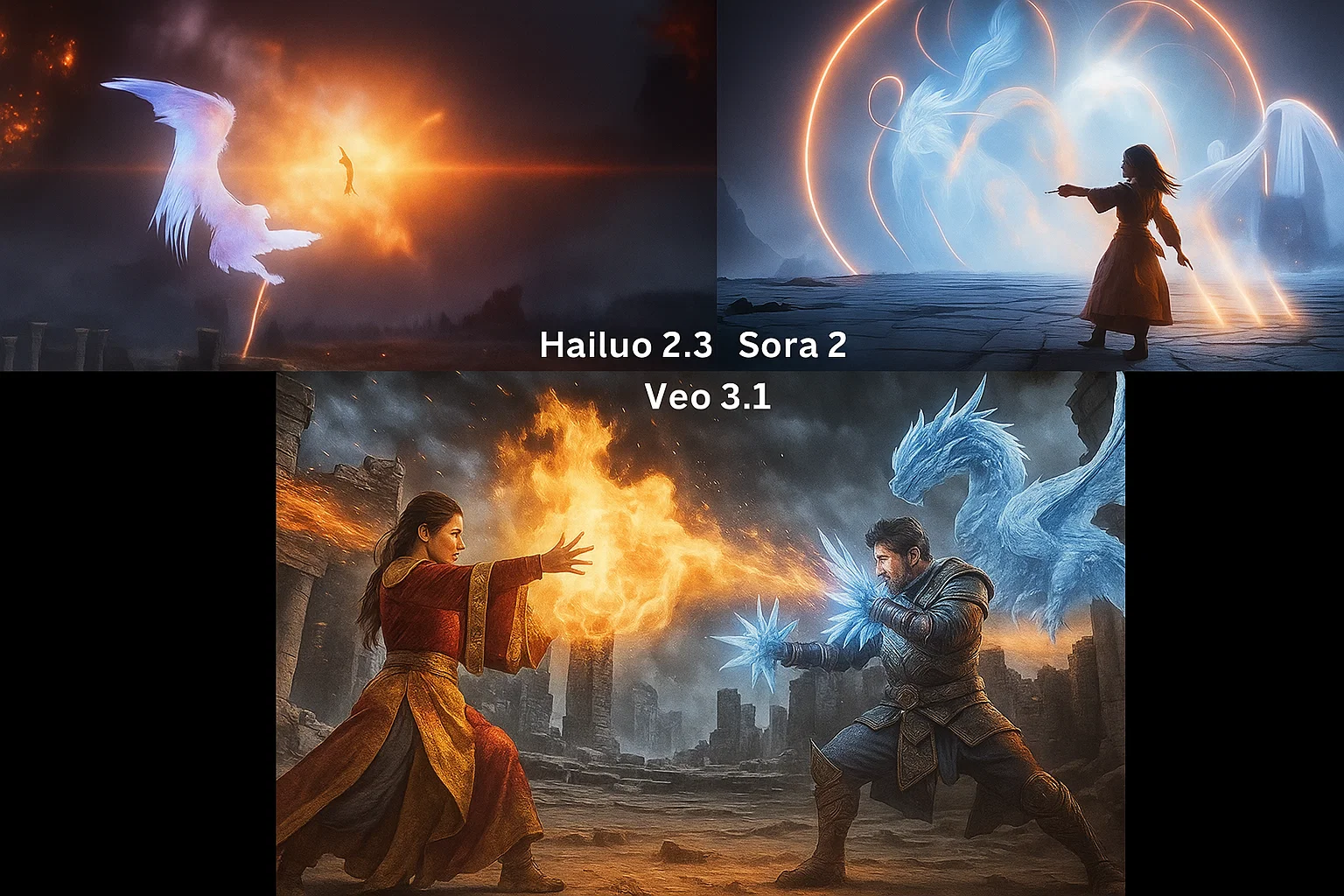

There’s an incredible new AI video generator on the scene that you won’t want to miss. Meet Hailuo 2.3, the latest and most advanced version of one of today’s most exciting video models. This release is a huge leap forward from its predecessor, Hailuo 02, and it’s already making waves in the AI community. The best part? You can get started with four free trials every day!

What’s New with Hailuo 2.3?

Hailuo 2.3 is a significant upgrade, bringing enhanced quality, smoother animations, and more realistic results. This new version is especially good at handling physics-based scenes, high-action sequences, and complex world-building. Whether you’re creating intense fight scenes or surreal landscapes, Hailuo 2.3 is designed to deliver stunning visuals. It also offers settings for both 768p and 1080p resolution, with options for 6-second or 10-second clips, although 1080p is currently limited to 6 seconds.
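If you plan generations in a script, encoding the resolution and duration limits above lets you catch invalid combinations early. This is a hypothetical client-side check, not Hailuo's API: the table reflects only what this article describes, and the service's actual limits may change.

```python
# Resolution -> allowed clip lengths in seconds, as described in this article.
# Hypothetical client-side table; the real service may change these limits.
ALLOWED_DURATIONS = {
    "768p": (6, 10),
    "1080p": (6,),  # 1080p is currently limited to 6-second clips
}

def validate_request(resolution: str, duration_s: int) -> None:
    """Raise ValueError if the resolution/duration pair is not offered."""
    if resolution not in ALLOWED_DURATIONS:
        raise ValueError(f"unsupported resolution: {resolution!r}")
    allowed = ALLOWED_DURATIONS[resolution]
    if duration_s not in allowed:
        raise ValueError(f"{resolution} supports {list(allowed)} s clips, got {duration_s} s")

if __name__ == "__main__":
    for res, secs in [("768p", 10), ("1080p", 6), ("1080p", 10)]:
        try:
            validate_request(res, secs)
            print(f"{res} / {secs}s: OK")
        except ValueError as err:
            print(f"{res} / {secs}s: rejected ({err})")
```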

High-Action Scenes and Dynamic Motion

One of the standout features of Hailuo 2.3 is its ability to generate high-action shots with incredible detail. For example, when prompted with “A sorceress casting massive fireballs while her opponent summons icy dragons, their powers clashing midair with explosive shockwaves,” the results are nothing short of epic. The dynamic camera pans and intense, cinematic movements create a truly immersive experience.

There’s still some minor noise and distortion around the edges of fast-moving objects, but the overall quality is impressive. Compared with other top models like Sora 2 and Veo 3.1 on the same prompt, Hailuo 2.3 stands out for its more epic, high-action feel: Sora 2’s movements can look like slow motion, and Veo 3.1 can lack the intensity of a true fight scene.

Advanced Physics and World Understanding

Hailuo 2.3 also excels at understanding complex physics and world interactions. When challenged with a tricky prompt like “a man riding a unicycle and juggling red balls,” Hailuo 2.3 largely succeeded: the unicycle remained stationary, but the juggling motion was impressively accurate. In comparison, both Sora 2 and Veo 3.1 struggled with the same prompt and failed to produce a convincing juggling animation.

This AI model also demonstrates a strong ability to understand and generate detailed scenes with multiple elements. With the prompt “A ballerina in a tutu practices spins in a sunlit studio with mirrored walls,” Hailuo 2.3 produced an anatomically correct and graceful animation, complete with all the specified details. It even included a rabbit watching from atop a grand piano and an elephant balancing on a circus ball outside the window. This level of detail and accuracy is a testament to its advanced world-understanding capabilities.

Start Creating with Hailuo 2.3 Today!

Ready to try it out for yourself? Hailuo 2.3 offers four free trials every day, making it easy to experiment and see what you can create. Just sign up, and you’ll receive free credits to unlock your AI video creation journey. Whether you’re a seasoned creator or just starting, Hailuo 2.3 is a powerful tool that’s worth exploring.
