AI How-To's & Tricks

Kling AI 2.0: An Incredible Leap? Our Exclusive Review & Tests

The world of AI video generation is moving at a breakneck pace, and Kuaishou’s Kling AI has just thrown down the gauntlet with its latest release. This **Kling AI 2.0** review dives deep into the new model, which claims to be the best in the world. But does it live up to the hype? We’re putting it to the test against industry giants like Runway Gen-4 and Google VEO-2, exploring its powerful new features, and revealing the hidden costs and drawbacks you need to know about before you jump in.


Kling AI 2.0 vs. The Competition: Head-to-Head Tests

To see if Kling AI 2.0 is truly “Best in the World,” we compared its output against Runway Gen-4 and Google’s VEO-2 using the same complex prompts. The results were revealing.

Challenge 1: Complex, Consecutive Actions (Parrot)

Prompt: “woman looks down at her hands as the camera follows her gaze, then a parrot gently lands on her hands”

This prompt tests the AI’s ability to understand sequential actions. Kling AI 2.0 absolutely nailed this. It perfectly followed the two-step instruction: the woman looks down first, then the parrot lands. Runway’s Gen-4 had the parrot on her hand from the start, failing the sequence. Google’s VEO-2 followed the prompt, but the actions felt unnaturally simultaneous rather than sequential. Kling was the clear winner in prompt adherence and natural timing.

Challenge 2: Environmental Effects (City Flood)

Prompt: “A massive flood hits the city as huge waves rush through the streets, flooding buildings and sweeping away cars”

Rendering large-scale, fluid dynamics is a massive challenge. Kling AI 2.0 delivered a spectacular and dynamic scene that matched the prompt perfectly, showing water filling streets and impacting the environment. Runway’s result was more like a single, overwhelming wave that simply obscured the camera. VEO-2 showed a flood, but it was far more static and less destructive, missing the “massive waves” and “sweeping cars” elements.

Challenge 3: High-Speed Action & Camera Motion (Knight)

Prompt: “A female knight charges into battle on a high-speed galloping horse as the camera circles around her in motion”

Kling’s output was incredibly dynamic, capturing the high-speed gallop effectively. While the facial coherence of the knight wavered slightly, the overall energy was fantastic. Runway Gen-4’s version looked more like slow-motion and lacked the high-speed intensity requested. Google’s VEO-2, unfortunately, produced a mostly unusable and static-looking scene where the horse’s gallop was far from high-speed.

Challenge 4: Zero-Gravity Dynamics (Floating Library)

Prompt: “Books and furniture float in zero gravity inside an old library as the camera flies overhead and tilts downward”

Kling AI 2.0 excelled here, creating a beautiful scene with both books and furniture levitating, and it correctly executed the “tilt downward” camera motion. Runway also managed floating objects but incorrectly interpreted the camera motion as a vertical move down, not a tilt. VEO-2 only rendered floating books, missing the furniture, and also failed to execute the specific camera tilt.

Challenge 5: The Ultimate Test (Samurai Fight)

Prompt: “Two samurai warriors fighting with katanas”

This is a notoriously difficult prompt for all AI video models due to object interaction. Kling AI 2.0 shows improvement, with more natural movements, but still struggles to keep the swords coherent when the blades make contact, a common issue across all platforms. Runway’s output, however, was surprisingly dynamic and looked more like a genuine, active fight, making it a strong contender in this specific test.

Kling AI 2.0 vs. Kling 1.6: A Generational Leap?

The most important comparison is against its previous version. Is the upgrade significant?

Eagle Hunter Comparison

In a scene where a man sends an eagle flying, the improvement is in the nuance. In version 2.0, the man gives a slight, natural push to release the eagle. In version 1.6, the eagle’s flight feels more static and self-initiated. The new model adds a layer of physical realism to the interaction.

Running Wolf Comparison

The difference here is night and day. In Kling 1.6, the running wolf’s motion looks stilted and almost crippled. In Kling AI 2.0, the wolf’s gait is fluid, powerful, and natural. The camera also follows the motion perfectly, demonstrating a huge leap in rendering animal locomotion.

Exploring New Features: Multi-Elements & Kolors 2.0

Beyond the core model improvements, Kling also launched new tools for both video and image creation.

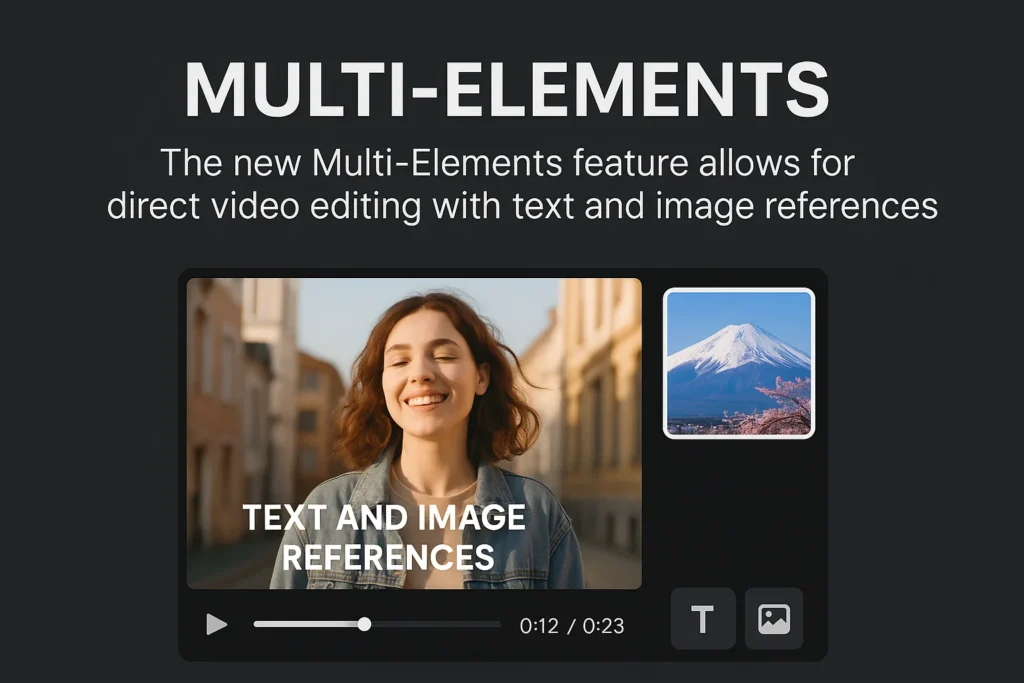

The “Multi-Elements” Video Editing Tool

Called “Multi-modal visual prompting,” this new feature lets you upload a video and use text prompts to add, delete, or swap elements within it. For example, you can upload a video and use a command like “Delete [parrot] from @ReferenceVideo” to remove an object. While incredibly promising, this feature is currently quite buggy and often fails to submit the task. It’s an exciting glimpse into the future but isn’t production-ready just yet. This is one of many new features we’re tracking in the world of AI News & Updates.

The Kolors 2.0 Image Model

Kling’s parent company also upgraded its text-to-image model, Kolors. Tests show it’s highly competitive with industry leaders like Midjourney and ChatGPT’s DALL-E 3, especially in prompt adherence and generating complex scenes. However, its ability to maintain character consistency using face references seems to have regressed slightly compared to its previous version, an area that still needs refinement.

The Verdict: The Staggering Cost & Time of Kling AI 2.0

Now for the two elephants in the room: time and money. The results from Kling AI 2.0 are often stunning, but they come at a price.

- Generation Time: Be prepared to wait. A single 5-second clip can take upwards of 30-40 minutes to generate, likely due to overloaded servers from the new launch.

- Cost: This is the biggest drawback. Generating a single 5-second video costs 100 credits. This is a significant price increase and makes the tool very expensive for regular use, especially with no unlimited plan announced.

This pricing model is a major barrier and the most disappointing aspect of this otherwise powerful release. Hopefully, as they hint, a cheaper version will be released soon. For a deeper dive into other AI tools, check out our AI Tools & Reviews section.
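To make the pricing concrete, here is a minimal Python sketch of the credit arithmetic. The 100-credits-per-clip figure comes from this review; the function names and the 660-credit balance are illustrative assumptions, not Kling plan details, and the estimate ignores failed or repeated generations.

```python
CREDITS_PER_5S_CLIP = 100  # figure quoted in this review


def credits_needed(num_clips: int, credits_per_clip: int = CREDITS_PER_5S_CLIP) -> int:
    """Total credits for a batch of 5-second clips, assuming every clip succeeds."""
    if num_clips < 0:
        raise ValueError("num_clips must be non-negative")
    return num_clips * credits_per_clip


def clips_affordable(credit_balance: int, credits_per_clip: int = CREDITS_PER_5S_CLIP) -> int:
    """How many whole 5-second clips a given credit balance covers."""
    return credit_balance // credits_per_clip


# One minute of footage stitched from twelve 5-second clips.
print(credits_needed(12))     # 1200
# Hypothetical balance of 660 credits.
print(clips_affordable(660))  # 6
```

In practice you will likely need several attempts per shot, so treat these numbers as a lower bound.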

Is Kling AI 2.0 the New King?

Yes and no. When it comes to prompt understanding, complex sequential actions, and dynamic motion, Kling AI 2.0 often produces results superior to its current rivals. The leap from version 1.6 is undeniable, showcasing massive improvements in realism and physics.

However, the platform is hampered by long generation times, buggy new features, and a prohibitively expensive credit system. While it has the potential to be the king, its accessibility and cost-effectiveness are major hurdles it must overcome. For now, it’s an incredible piece of technology that offers a tantalizing preview of the future of AI storytelling.


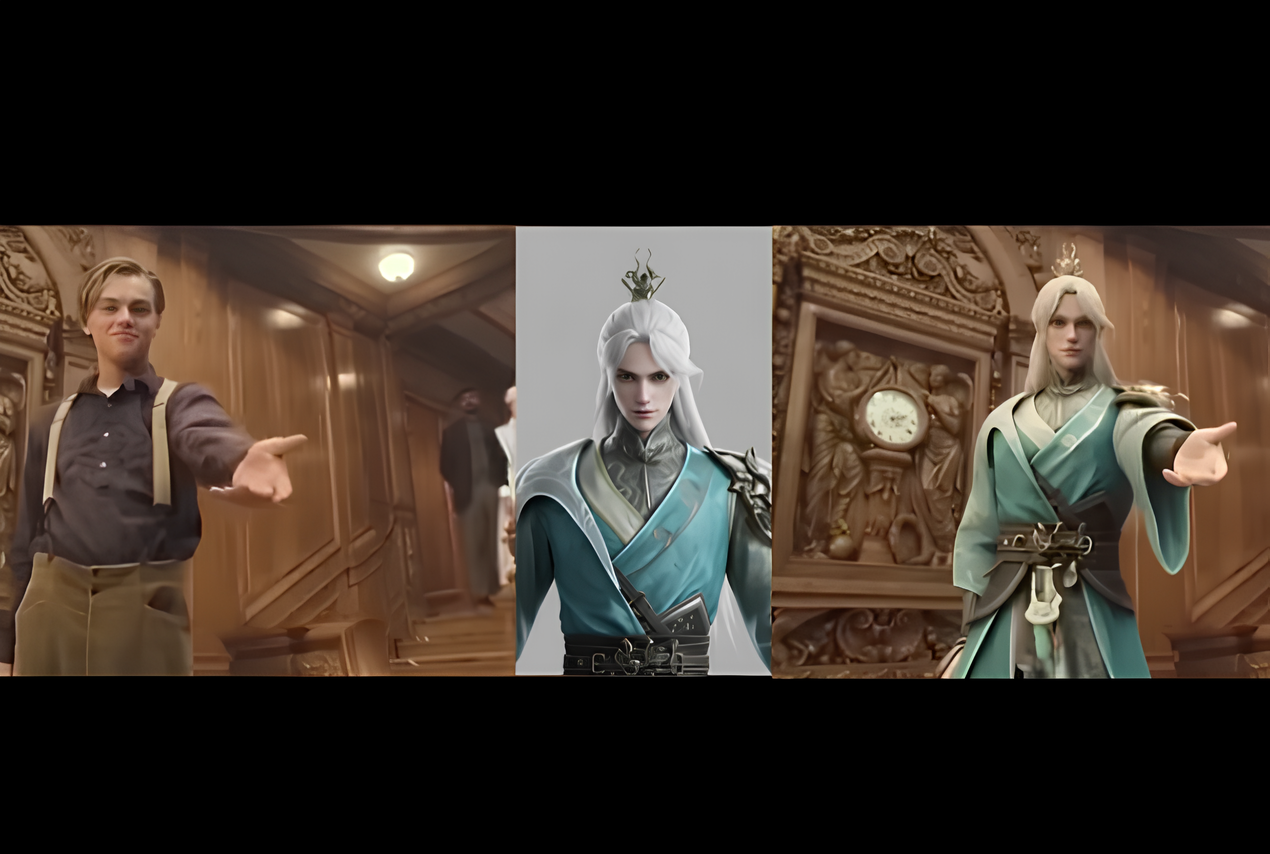

MoCha AI: The Ultimate Guide to Flawless Video Character Swaps

Ever wondered if you could take a scene from your favorite movie and seamlessly swap out the main character for someone entirely new, just using a single reference image? Thanks to the incredible advancements in generative AI, this is no longer science fiction. In this guide, we’ll explore the amazing capabilities of MoCha AI, a free and open-source tool that offers end-to-end video character replacement with stunning accuracy.

Developed by the “Orange Team,” MoCha AI is a powerful new framework that stands out for its ability to create high-quality, consistent character replacements without needing complex structural guidance. Let’s dive into what makes it so special and how you can use it yourself.

- What is MoCha AI and What Can It Do?

- How MoCha AI Stacks Up Against Competitors

- Getting Started: How to Install and Use MoCha AI with ComfyUI

- Final Thoughts: The Future of AI Video Editing

What is MoCha AI and What Can It Do?

MoCha AI is a free, open-source AI tool designed to replace any character in an existing video using just a single reference image of a new character. Its advanced model is capable of capturing and transferring complex motions with incredible detail. The key features include:

- Full Body Motion Transfer: It perfectly matches the movements of the original character, including subtle hand gestures and body language.

- Facial & Lip Sync Fidelity: The new character’s facial expressions and lip movements are synchronized with the original audio and performance.

- Seamless Integration: MoCha AI excels at matching the white balance, lighting, and colors of the original video. This ensures the new character blends into the scene naturally, avoiding the “pasted-on” look that other tools can produce.

- Intelligent Segmentation: The tool is smart enough to identify and replace only the target character, leaving other elements like background scenery and even subtitles completely untouched.

How MoCha AI Stacks Up Against Competitors

While similar tools like Wan Animate and Kling also offer character animation, the video highlights several areas where MoCha AI demonstrates superior performance. In side-by-side comparisons, MoCha consistently produces more realistic and better-integrated results.

The primary advantage is its ability to preserve the original scene’s color and lighting. In several examples, including a tricky scene with a moving lightbulb, MoCha’s output looks far more natural. The character feels like they are truly in the environment, whereas results from other models can appear washed out or poorly lit.

Furthermore, MoCha AI handles unconventional characters, like those wearing masks, much more effectively. In one test, Wan Animate failed to generate the masked character properly, while MoCha inserted it seamlessly, retaining all the details from the reference photo.

For those interested in exploring other powerful video manipulation tools, check out our comprehensive reviews in the AI Tools & Reviews category.

Getting Started: How to Install and Use MoCha AI with ComfyUI

The easiest way to run MoCha AI locally is through ComfyUI, a popular node-based interface for generative AI models. The video uses a custom wrapper node that makes the process straightforward.

Step 1: Install the WanVideoWrapper for ComfyUI

This entire workflow runs on the “ComfyUI-WanVideoWrapper,” a custom node developed by user Kijai. If you haven’t already, you need to install it in your ComfyUI’s custom_nodes folder. You can do this by cloning the repository from GitHub.
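As a rough sketch, the clone step can be scripted in Python. The repository URL below is our assumption about where Kijai’s wrapper is hosted on GitHub, and the "ComfyUI" root path is a hypothetical install location, so verify both before running anything. `clone_command` only builds the command; `clone` is the part that actually calls git.

```python
import subprocess
from pathlib import Path

# Assumed public location of the wrapper repository; verify before cloning.
REPO_URL = "https://github.com/kijai/ComfyUI-WanVideoWrapper"


def clone_command(comfyui_root: str) -> list[str]:
    """Build the git command that clones the wrapper into custom_nodes."""
    target = Path(comfyui_root) / "custom_nodes" / "ComfyUI-WanVideoWrapper"
    return ["git", "clone", REPO_URL, str(target)]


def clone(comfyui_root: str) -> None:
    """Run the clone (requires git on PATH and network access)."""
    subprocess.run(clone_command(comfyui_root), check=True)


# Print the command for a hypothetical install location instead of running it.
print(" ".join(clone_command("ComfyUI")))
```

Printing the command first makes it easy to check the target folder before anything is downloaded.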

Once cloned, you’ll need to install its dependencies. If you use the portable version of ComfyUI, you can run the following command in your ComfyUI_windows_portable folder (the embedded Python folder is spelled python_embeded in the portable build):

python_embeded\python.exe -m pip install -r ComfyUI\custom_nodes\ComfyUI-WanVideoWrapper\requirements.txt

Step 2: Download the Necessary Models

MoCha requires several models to function correctly. The workflow file handily includes the links, but here’s what you need:

- The MoCha AI Model: The original model is quite large. Thankfully, there is a quantized FP8 version available which is smaller (around 14.3 GB) and works well for consumer GPUs. Download this and place it in your ComfyUI/models/diffusion_models/ folder.

- VAE & Text Encoder: You’ll also need the Wan2.1 VAE and a UMT5 text encoder. Place the VAE in the ComfyUI/models/vae/ folder and the text encoder in the ComfyUI/models/text_encoders/ folder.

- (Optional) LightX2v Model: To dramatically speed up generation, it’s highly recommended to download the LightX2v LoRA model. This can reduce the required steps from 20-30 down to just 6. Place this file in the ComfyUI/models/loras/ folder.
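Before restarting ComfyUI, it can help to confirm that each model folder actually contains a file. The sketch below is a small, hypothetical helper (not part of ComfyUI or the wrapper) that only checks the four folders named above; it does not verify file names or sizes.

```python
from pathlib import Path

# Folders named in this guide, relative to the ComfyUI root.
MODEL_SUBDIRS = {
    "MoCha model": "models/diffusion_models",
    "VAE": "models/vae",
    "text encoder": "models/text_encoders",
    "LightX2v LoRA (optional)": "models/loras",
}


def empty_model_dirs(comfyui_root: str) -> list[str]:
    """Return the labels whose folder is missing or contains no files."""
    root = Path(comfyui_root)
    missing = []
    for label, subdir in MODEL_SUBDIRS.items():
        folder = root / subdir
        has_files = folder.is_dir() and any(p.is_file() for p in folder.iterdir())
        if not has_files:
            missing.append(label)
    return missing


# "ComfyUI" is a hypothetical install path; an empty list means every folder has a file.
print(empty_model_dirs("ComfyUI"))
```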

Step 3: Set Up the Workflow

Once all models are downloaded and placed in the correct folders, restart ComfyUI. Drag and drop the MoCha workflow JSON file onto the interface to load it. Now you can start setting up your generation!

- Load Your Video: In the “Load Reference Video” node, upload the video you want to edit.

- Create a Mask: Next, generate a segmentation mask to tell the AI which character to replace. The workflow guides you through this; you use green dots to select parts of the character and red dots to select parts of the background (or objects to exclude). This helps create an accurate mask.

- Load Your Reference Image: Upload the image of the new character you want to insert into the video. For best results, use an image with a clean, simple background.

- Generate! With everything set up, you can run the workflow. The MoCha AI will process the video frame by frame, replacing the original character with your new one while matching the motion and lighting.

The process can take some time depending on your hardware and video length, but the results are truly impressive, capturing everything from hand gestures to reflections on surfaces.

To dive deeper into the technical aspects or contribute to the project, you can visit the official MoCha AI GitHub page.

Final Thoughts: The Future of AI Video Editing

MoCha AI represents a significant leap forward for open-source character replacement tools. Its ability to create seamless, high-fidelity results opens up a world of creative possibilities for filmmakers, content creators, and AI enthusiasts. By leveraging the power of ComfyUI, it’s more accessible than ever to experiment with this cutting-edge technology right on your own computer. This is without a doubt one of the best character transfer tools available today.


Mobile AI Habits: 5 Essential Secrets to Master Your Phone

Are you truly making the most of the powerful AI in your pocket? While most people use apps like ChatGPT on their phones, their workflow is often clunky, inefficient, and anything but seamless. The constant cycle of copying, pasting, saving, and uploading creates unnecessary friction. This article will transform how you interact with AI on your smartphone by introducing you to five essential mobile AI habits. These simple yet powerful techniques, inspired by productivity expert Jeff Su, are designed to reduce friction and integrate AI so naturally into your daily routine that it becomes an extension of how you think and work.

By mastering these workflows, you’ll move beyond treating AI as a novelty and start leveraging it as a powerful, on-the-go productivity partner.

Habit #1: Drag to Ask — The Ultimate Workflow Hack

The first and most transformative mobile AI habit is mastering the cross-app drag-and-drop feature. This functionality has been available on both Android and iPhone for some time but remains a vastly underutilized secret for AI productivity.

Instead of the tedious process of saving an image or copying text to your clipboard, you can simply long-press an item with one finger and use a second finger to swipe into your AI app of choice. This allows you to drop content directly into a chat for instant analysis.

Real-World Use Cases:

- Analyze Complex Images: Encounter a graph with tiny font? Long-press the image, drag it into ChatGPT, and ask it to summarize the data and transcribe the text for you.

- Review Documents on the Fly: Need to quickly check the terms of a contract while on a call? Drag the PDF from your files app into ChatGPT and ask specific questions to get instant answers without manually searching.

- Recreate Spreadsheets: Take a screenshot of a table or spreadsheet, long-press the screenshot preview, and drag it into ChatGPT. You can then ask it to “recreate this table and let me download as .csv,” turning a static image into an editable file in seconds.

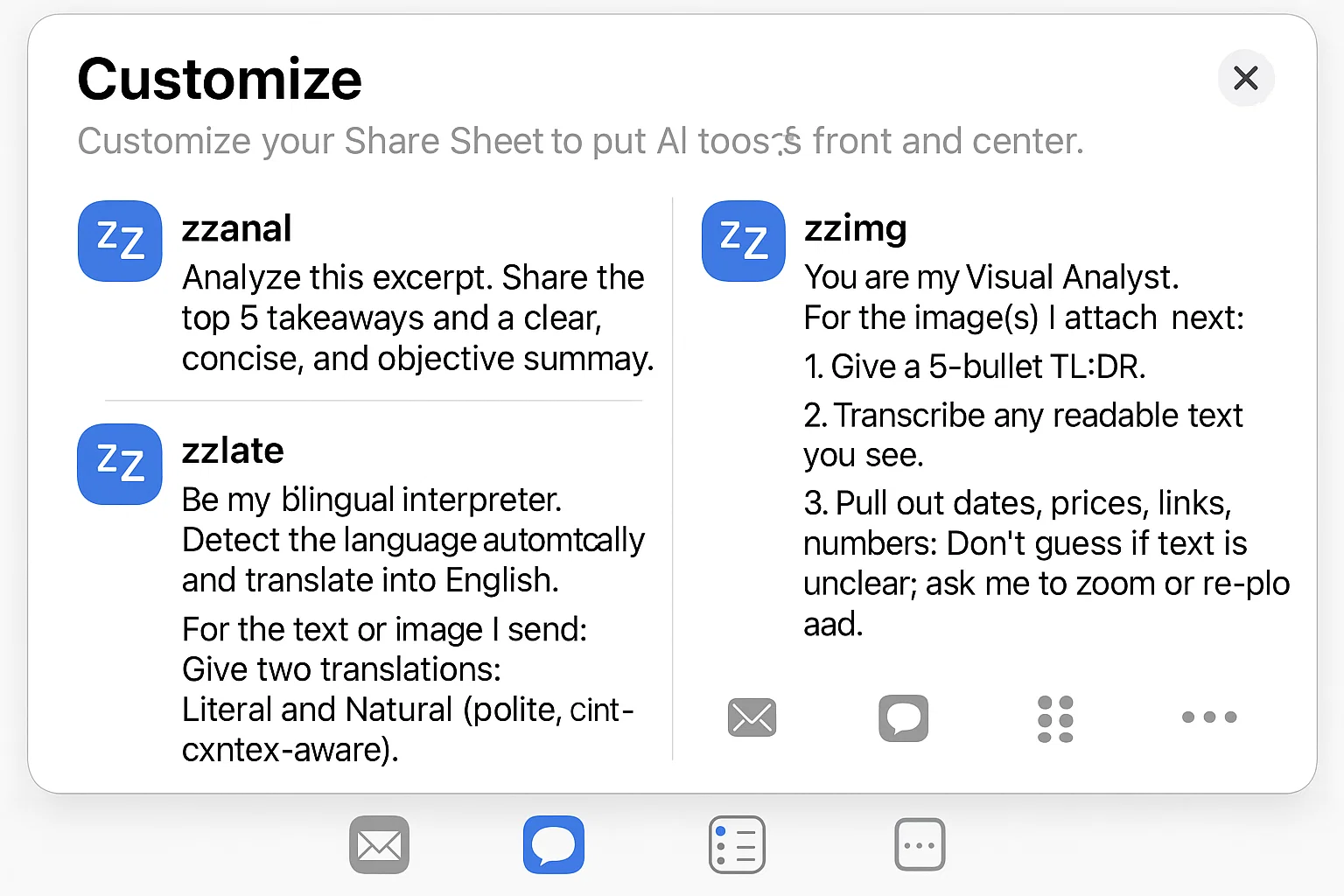

Habit #2: Instant Content Analysis (The Share Sheet Hack)

What about apps that don’t support drag-and-drop, like many non-native applications? The solution lies in optimizing your system’s Share Sheet. This is another crucial mobile AI habit that ensures you can send content to an AI from anywhere with just two taps.

By default, AI apps are often buried in the share menu. You can easily customize this to place your favorite AI tools—like ChatGPT, Gemini, or Perplexity—right at the front for immediate access.

How to Set It Up:

- For iPhone Users: Tap the “Share” icon in any app, scroll to the far right of the app list, and tap “More.” In the next menu, tap “Edit,” find your preferred AI apps under “Suggestions,” and tap the green plus icon to add them to your “Favorites.” You can then drag them to the top of the list.

- For Android Users: The process is even simpler. When you open the Share Sheet, just long-press on an AI app icon and choose the option to “Pin” it to the top of your share menu.

This simple change dramatically speeds up workflows like summarizing articles from your web browser or analyzing attachments from an email.

Habit #3: The Prompt Snippet Keyboard

Typing long, detailed prompts on a mobile keyboard is tiring and inefficient. The third mobile AI habit is to create a “prompt snippet keyboard” using your phone’s built-in Text Replacement feature.

This allows you to type a short, simple keyword that automatically expands into a complete, well-structured prompt. To avoid accidentally triggering your prompts, it’s a good practice to use an unusual prefix for your shortcuts, like “zz.”

How to Set It Up & Examples:

- Go to your phone’s settings and search for “Text Replacement.”

- Create a new replacement. In the “Phrase” field, paste your detailed prompt.

- In the “Shortcut” field, enter your short keyword (e.g., “zzimg”).

Here are a few powerful examples to get you started:

- Shortcut: zzanal
  Phrase: Analyze this excerpt. Share the top 5 takeaways and a clear, concise, and objective summary.

- Shortcut: zzimg
  Phrase: You are my Visual Analyst. For the image(s) I attach next: 1. Give a 5-bullet TL;DR. 2. Transcribe any readable text you see. 3. Pull out dates, prices, links, numbers. Don’t guess if text is unclear; ask me to zoom or re-upload.

- Shortcut: zzlate
  Phrase: Be my bilingual interpreter. Detect the language automatically and translate into English. For the text or image I send: Give two translations: Literal and Natural (polite, context-aware). Preserve names, addresses, and formatting.
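To illustrate why an unusual prefix like “zz” matters, here is a small Python simulation of text replacement. It is only a sketch of the idea: your phone’s keyboard does the real expansion, and the `expand` function and the shortened zzlate phrase are our own simplifications.

```python
# Shortcut -> phrase pairs, mirroring the examples above (zzlate is shortened).
SNIPPETS = {
    "zzanal": "Analyze this excerpt. Share the top 5 takeaways and a clear, "
              "concise, and objective summary.",
    "zzlate": "Be my bilingual interpreter. Detect the language automatically "
              "and translate into English.",
}


def expand(text: str, snippets: dict[str, str] = SNIPPETS) -> str:
    """Replace whole words that match a shortcut; leave everything else alone."""
    return " ".join(snippets.get(word, word) for word in text.split())


print(expand("zzanal"))           # expands to the full analysis prompt
print(expand("late for a call"))  # unchanged: "late" is not a shortcut
```

Because no everyday word starts with “zz”, ordinary typing such as “late” or “anal” never triggers an expansion by accident.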

Habit #4: Pocket Voice Command Launcher

Ideas can strike at any moment, especially when you’re on the move. Instead of fumbling to open an app and type, you can create an instant voice-to-text launcher. This is perfect for capturing thoughts, brainstorming, or asking quick questions hands-free.

On the iPhone, this is easily achieved by mapping the Action Button to a specific ChatGPT shortcut.

How to Set It Up on iPhone:

- Go to Settings > Action Button.

- Swipe to the “Shortcut” option.

- Tap the button below and search for ChatGPT.

- From the list of options, select “Start dictation.”

Now, a single press of the Action Button will launch a new ChatGPT conversation and immediately begin transcribing your voice, making it the perfect tool for capturing fleeting ideas while walking or commuting.

Habit #5: The Smart Context Commander

The final habit is the most advanced, turning your Share Sheet into a powerful, context-aware command center. Using the iOS Shortcuts app, you can create a tool that presents a menu of custom AI actions whenever you share text with it. This allows you to apply different prompts to the same piece of text without any extra typing.

How It Works:

- You select a block of text from an article, email, or note.

- You tap “Share” and select your custom “AI Actions” shortcut.

- A menu appears with options you’ve created, such as “Quick summary,” “Draft a reply,” or “ELI5” (Explain Like I’m 5).

- When you choose an option, the shortcut copies both the pre-defined prompt for that action and your selected text to the clipboard.

- It then opens ChatGPT, where you can simply paste the combined prompt and context to get your desired result.

This setup combines the simplicity of the Share Sheet with the power of custom prompts, making it one of the most versatile mobile AI habits for any power user.
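The core of this shortcut is simple string assembly: pick a prompt, attach the selected text, and copy the result. The Python sketch below mirrors that logic under our own assumptions; the prompt wordings are hypothetical, and the real version is built from actions in the iOS Shortcuts app rather than from code.

```python
# Menu label -> pre-defined prompt (wordings are illustrative, not from the source).
ACTIONS = {
    "Quick summary": "Summarize the following text in three bullet points.",
    "Draft a reply": "Draft a polite, concise reply to the following message.",
    "ELI5": "Explain the following text like I'm 5.",
}


def build_clipboard_payload(action: str, selected_text: str) -> str:
    """Combine the chosen action's prompt with the shared text."""
    if action not in ACTIONS:
        raise KeyError(f"Unknown action: {action}")
    return f"{ACTIONS[action]}\n\n{selected_text.strip()}"


payload = build_clipboard_payload("ELI5", "  Compound interest is interest on interest.  ")
print(payload)
```

Keeping the prompts in one table means adding a new menu option is a one-line change.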


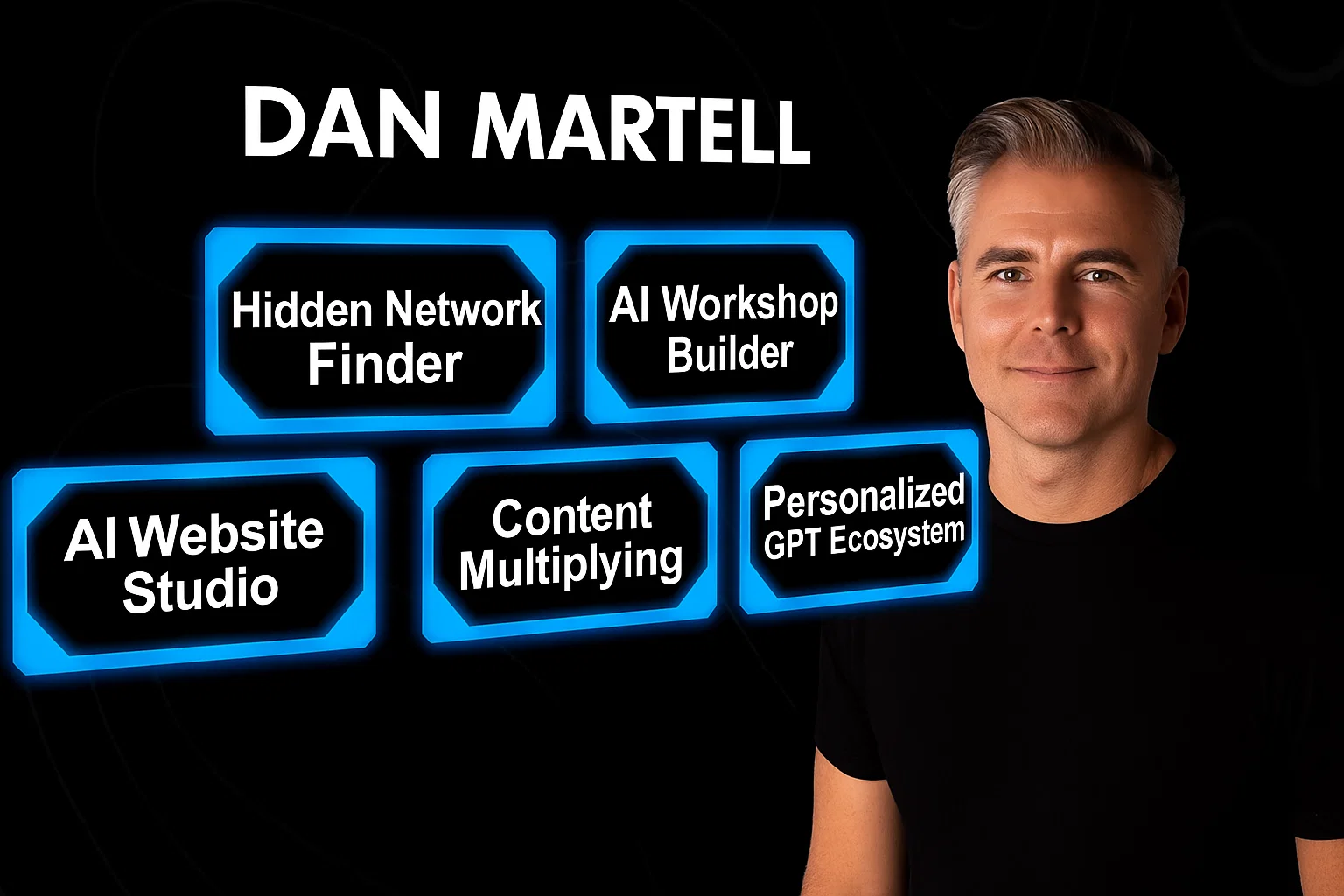

Make Money With AI: 5 Essential Genius Strategies for 2025

Are you looking for proven ways to make money with AI but feel like you need to be a technical expert? According to AI entrepreneur and investor Dan Martell, that couldn’t be further from the truth. With the rapid advancement of artificial intelligence, the barrier to entry for creating profitable AI-driven businesses and services has never been lower. Martell, whose AI company portfolio is on track for a billion-dollar valuation, insists that you don’t need to be a coding genius to generate thousands, if not millions, of dollars. This article breaks down five of his most powerful, genius strategies that anyone can start using today.

1. Personalized GPT Ecosystems for High-Value Clients

One of the most lucrative opportunities to make money with AI is by selling services to high-net-worth individuals and successful business owners. Martell points out that while these leaders hear buzzwords like “Custom GPTs,” “system prompts,” and “master prompts,” they are often too busy and confused to implement them effectively. They know they need AI, but they don’t have the time to figure it out. This is where you come in.

You can become the go-to expert who sets up a personalized GPT ecosystem for them. This service helps busy executives streamline their workflow, automate tasks, and organize their digital world. They are more than willing to pay a premium for someone to come in and build this custom AI infrastructure for them.

How to Build a Personalized GPT Ecosystem in 4 Steps:

- Create an Intake Form: Start by gathering all necessary information from your client. This includes their preferences, workflow details, communication tone, and business goals. The more context you collect, the better you can tailor the AI system.

- Build the Key Pieces: Use the information from the intake form to construct the core components of their AI system. This involves creating master prompts for different life contexts, system prompts for specific outputs, custom GPTs, and organizing their work into logical project folders.

- Generate a Standard Operating Procedure (SOP): Upload all the documents and prompts you’ve created into ChatGPT and ask it to write a step-by-step playbook. This SOP will guide the client on how to use their new personalized system effectively.

- Set It Up: Finally, get access to the client’s ChatGPT account and implement everything. Organize and label all the components so the system is clear, intuitive, and easy for them to use without confusion.

Pro Tip: Martell suggests charging a monthly subscription-based maintenance fee. Since AI technology is constantly evolving with updates every few months, you can provide ongoing support, tweaks, and updates to their system, creating a recurring revenue stream.

2. The AI Workshop Builder

If you have expertise in a specific area—whether it’s marketing, fitness, or quitting a 9-to-5—you can make money with AI by packaging your knowledge into a sellable workshop. Many people feel they aren’t “certified experts,” but Martell argues that if you’ve achieved a result that others desire, you are qualified to teach it. The fear of not being an expert stops most people, but all you need is to be a few steps ahead of your audience.

AI can help you build the entire workshop from scratch. It can interview you to extract your knowledge, structure the curriculum, write the scripts, create the outlines, and even develop relevant exercises and examples for your target audience. You simply need to provide the core expertise, and AI will handle the instructional design.

Pro Tip: Ask your AI to structure your workshop using the 4MAT model, an adult learning theory that breaks down information into four key questions: Why? What? How? and What If? This makes the content more engaging and easier for people to learn.
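As one possible way to apply this tip, the sketch below assembles a 4MAT-structured prompt you could paste into a chatbot. The function name, the prompt wording, and the example topic are our own assumptions; only the four questions come from the tip above.

```python
FOUR_MAT_QUESTIONS = ["Why?", "What?", "How?", "What If?"]


def build_workshop_prompt(topic: str, audience: str) -> str:
    """Return a prompt asking the AI to structure a workshop with 4MAT."""
    sections = "\n".join(
        f"{i}. {question}" for i, question in enumerate(FOUR_MAT_QUESTIONS, start=1)
    )
    return (
        f"Design a workshop on {topic} for {audience}.\n"
        "Structure it with the 4MAT model, one section per question:\n"
        f"{sections}\n"
        "For each section, give an outline, a short script, and one exercise."
    )


# Hypothetical topic and audience.
print(build_workshop_prompt("meal prep", "busy parents"))
```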

Learn more about AI’s role in education in our AI Technology Explained section.

3. AI Website Studio

In the past, building a professional website required extensive coding knowledge. Today, AI-powered tools like Lovable and Framer allow you to create beautiful, responsive, pixel-perfect websites in minutes without writing a single line of code. This opens up a massive opportunity to start an AI Website Studio.

The entire process can be automated. A client pays, fills out a detailed intake form (which AI can help you create), and that information is fed via an API to a tool like Lovable, which generates the website. The client can then provide feedback through another form, which AI uses to make revisions automatically. Martell notes that some entrepreneurs are already making $10 million a year with fully automated website-building businesses.

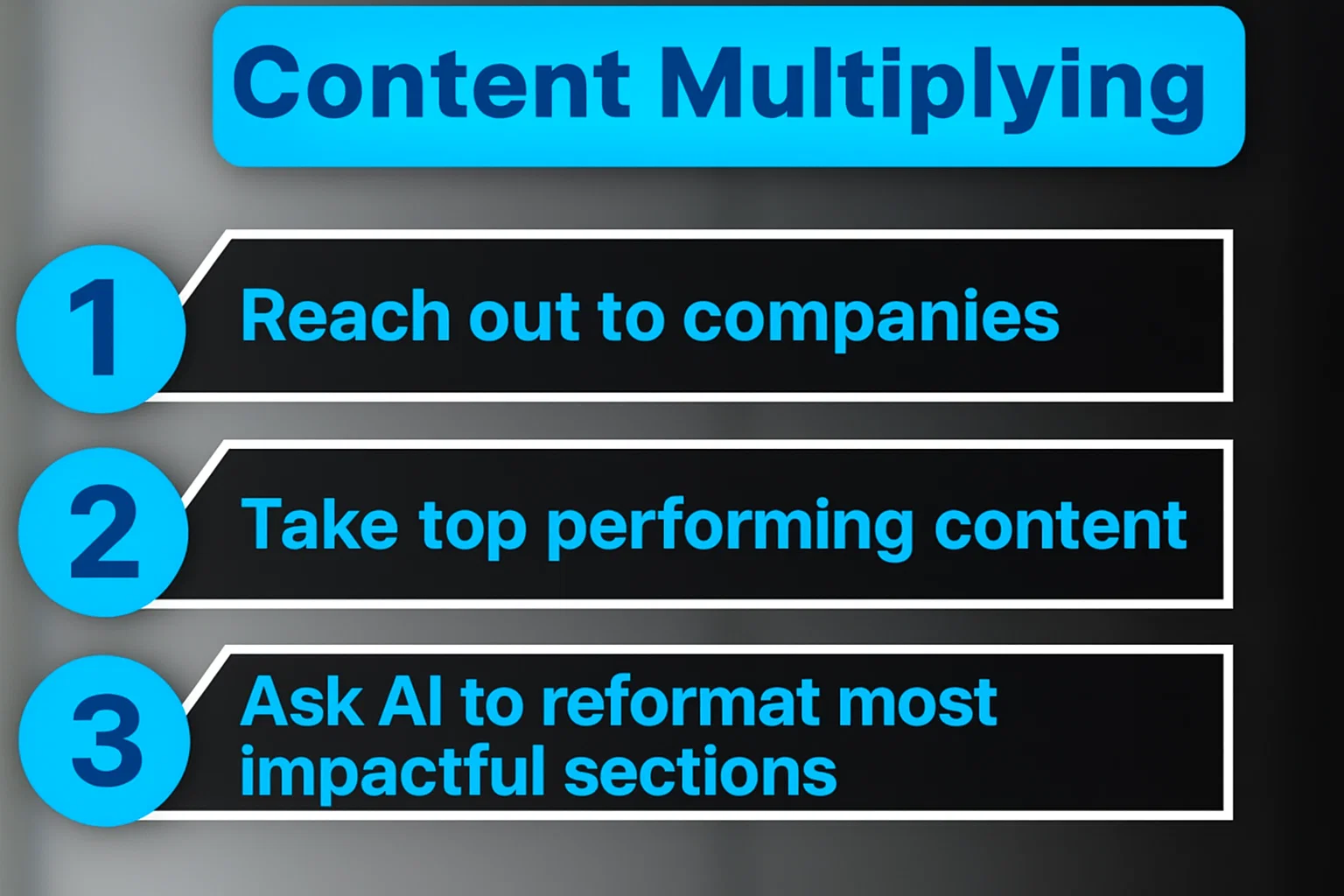

4. Content Multiplying

Many businesses and creators have a wealth of long-form content, like podcasts and YouTube videos, but lack the time to repurpose it for other platforms. You can offer a service to “multiply” their content, turning one long video into dozens of short-form clips, carousels, and memorable tweets for platforms like TikTok, Instagram, and X (formerly Twitter).

How to Multiply Content with AI:

- Find Companies with Existing Content: Reach out to businesses that are already producing long-form content but have a weak presence on short-form platforms.

- Identify Top-Performing Content: Analyze their existing content to find the pieces with the most views and engagement.

- Transcribe and Reformat: Transcribe the top-performing content and use AI to pull out the most impactful sections, reformatting them for different social media platforms.

Pro Tip: Use the PSL (Point, Story, Lesson) framework. Ask AI to find segments in the content that make a clear point, tell a compelling story, and deliver a valuable lesson. This structure performs incredibly well on social media.
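The selection and prompting steps can be sketched in a few lines of Python. Everything here is illustrative: the episode data is made up, ranking by views with engagement rate as a tie-breaker is just one reasonable reading of “top-performing,” and the PSL prompt wording is our own.

```python
from dataclasses import dataclass


@dataclass
class Episode:
    title: str
    views: int
    likes: int
    comments: int


def engagement_rate(ep: Episode) -> float:
    """Likes plus comments per view (0.0 when there are no views)."""
    return (ep.likes + ep.comments) / ep.views if ep.views else 0.0


def top_performing(episodes: list[Episode], n: int = 2) -> list[Episode]:
    """Sort by views, breaking ties by engagement rate, and keep the top n."""
    ranked = sorted(episodes, key=lambda ep: (ep.views, engagement_rate(ep)), reverse=True)
    return ranked[:n]


def psl_prompt(transcript: str) -> str:
    """Ask the AI for segments that fit the Point, Story, Lesson framework."""
    return (
        "From the transcript below, find segments that make a clear Point, "
        "tell a compelling Story, and deliver a valuable Lesson.\n\n" + transcript
    )


# Made-up sample data.
episodes = [
    Episode("Hiring mistakes", views=12000, likes=600, comments=90),
    Episode("Pricing teardown", views=45000, likes=1500, comments=300),
    Episode("Founder Q&A", views=8000, likes=700, comments=200),
]
print([ep.title for ep in top_performing(episodes)])  # ['Pricing teardown', 'Hiring mistakes']
```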

5. The Hidden Network Finder

Your network is your net worth, but most people are sitting on a hidden goldmine of connections. An incredible way to make money with AI is by using it to analyze your existing network—your email contacts, social media followers, and professional connections—to uncover valuable, hidden opportunities.

Tools like Social Sweep can connect to your accounts (Gmail, Instagram, LinkedIn, etc.), enrich that data with public information, and perform an AI analysis to identify potential clients, strategic partners, suppliers, or media opportunities. For example, you could ask it: “Who in my network lives in Austin, hosts a podcast in person, and is interested in entrepreneurship?” The AI can generate a targeted list and even help you craft the perfect, personalized outreach message for each person.
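Under the hood, a query like the Austin example boils down to filtering enriched contact records. The sketch below shows that idea with made-up contacts and a hypothetical `Contact` structure; it is not how Social Sweep or any specific tool works, and a real tool would rely on enriched data and AI matching rather than exact field comparisons.

```python
from dataclasses import dataclass, field


@dataclass
class Contact:
    name: str
    city: str
    hosts_in_person_podcast: bool
    interests: set[str] = field(default_factory=set)


def find_matches(contacts: list[Contact], city: str, interest: str) -> list[str]:
    """Names of contacts in the city who host an in-person podcast and share the interest."""
    return [
        c.name
        for c in contacts
        if c.city == city and c.hosts_in_person_podcast and interest in c.interests
    ]


# Made-up sample network.
network = [
    Contact("Ana", "Austin", True, {"entrepreneurship", "fitness"}),
    Contact("Ben", "Austin", False, {"entrepreneurship"}),
    Contact("Cleo", "Denver", True, {"entrepreneurship"}),
]
print(find_matches(network, "Austin", "entrepreneurship"))  # ['Ana']
```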

By using AI to systematically mine your network, you can connect the dots and unlock opportunities that were previously invisible, turning dormant contacts into profitable relationships.

Explore how network analysis works on platforms like LinkedIn.
