Cursor has launched a plugin marketplace that lets users extend AI agents with external tools. According to FutureTools News, the platform could change how AI agents are used across industries. The marketplace offers tools and services that integrate with AI agents to extend their functionality and improve their performance.

Introduction to Cursor Plugin Marketplace

The Cursor plugin marketplace is an online platform where developers can create, share, and deploy plugins for AI agents. Plugins can add new features, improve existing ones, or form the basis of entirely new applications. For developers, the marketplace is a place to showcase their work while contributing to the wider AI ecosystem. According to the Cursor blog, the marketplace is an essential part of the company’s strategy to make AI more accessible and user-friendly.

Benefits of the Plugin Marketplace

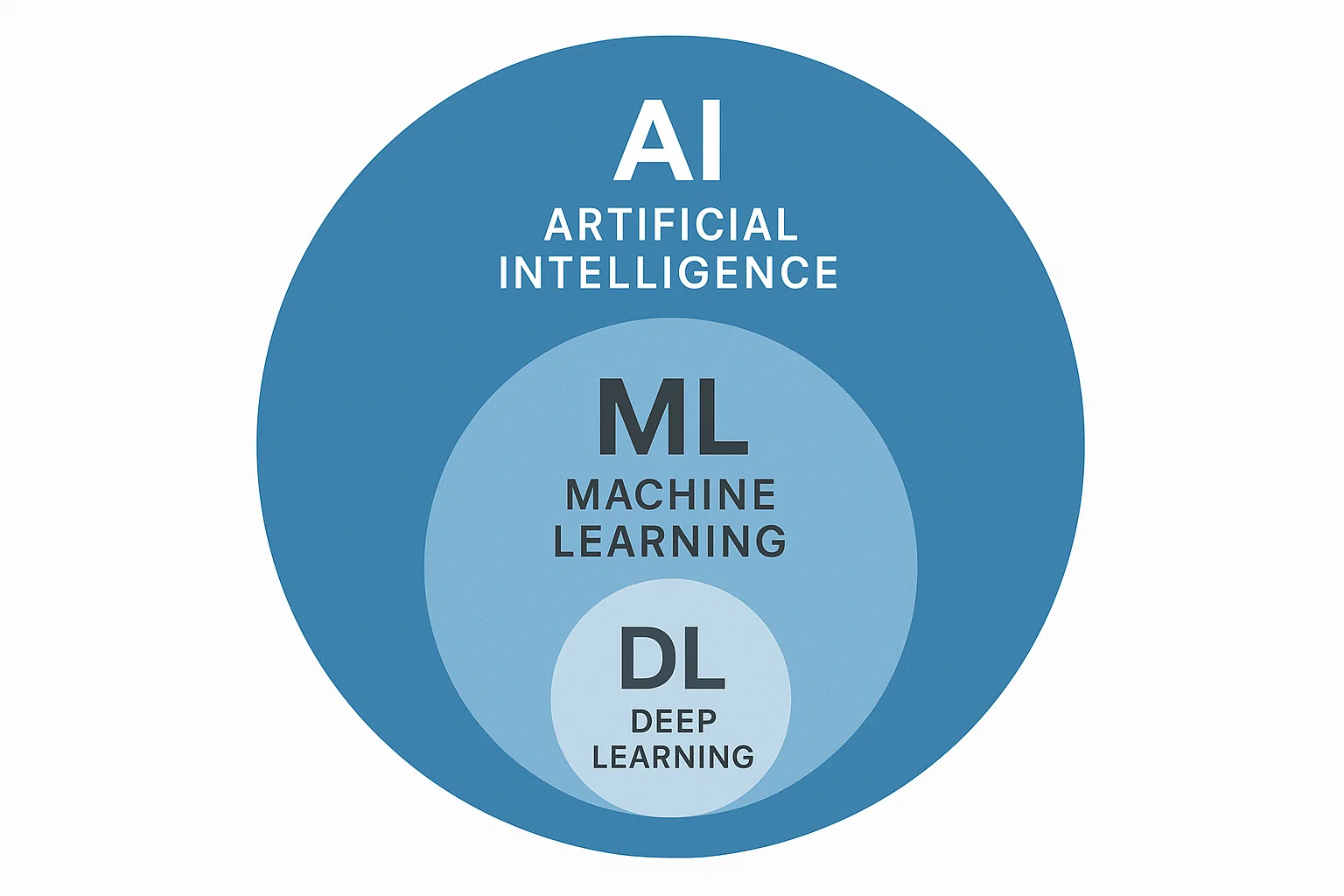

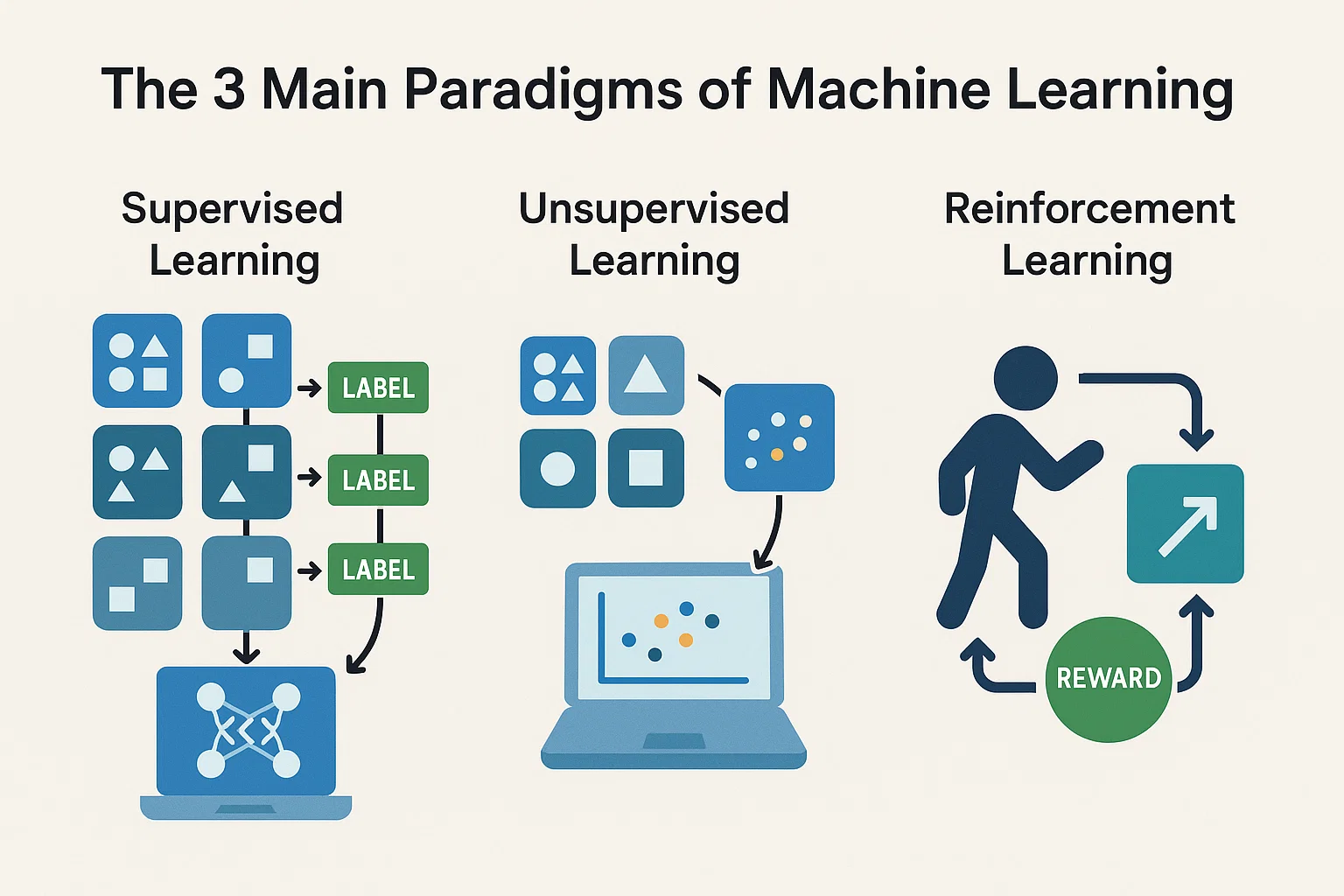

The marketplace lets users extend what their AI agents can do, improve agent performance and efficiency, and improve the overall user experience. With a wide range of plugins to choose from, users can tailor agents to specific needs. This is particularly useful in industries such as customer service, healthcare, and finance, where AI agents are increasingly used to automate tasks and support decision-making. Experts in the field note that machine learning and natural language processing can significantly enhance agent capabilities.

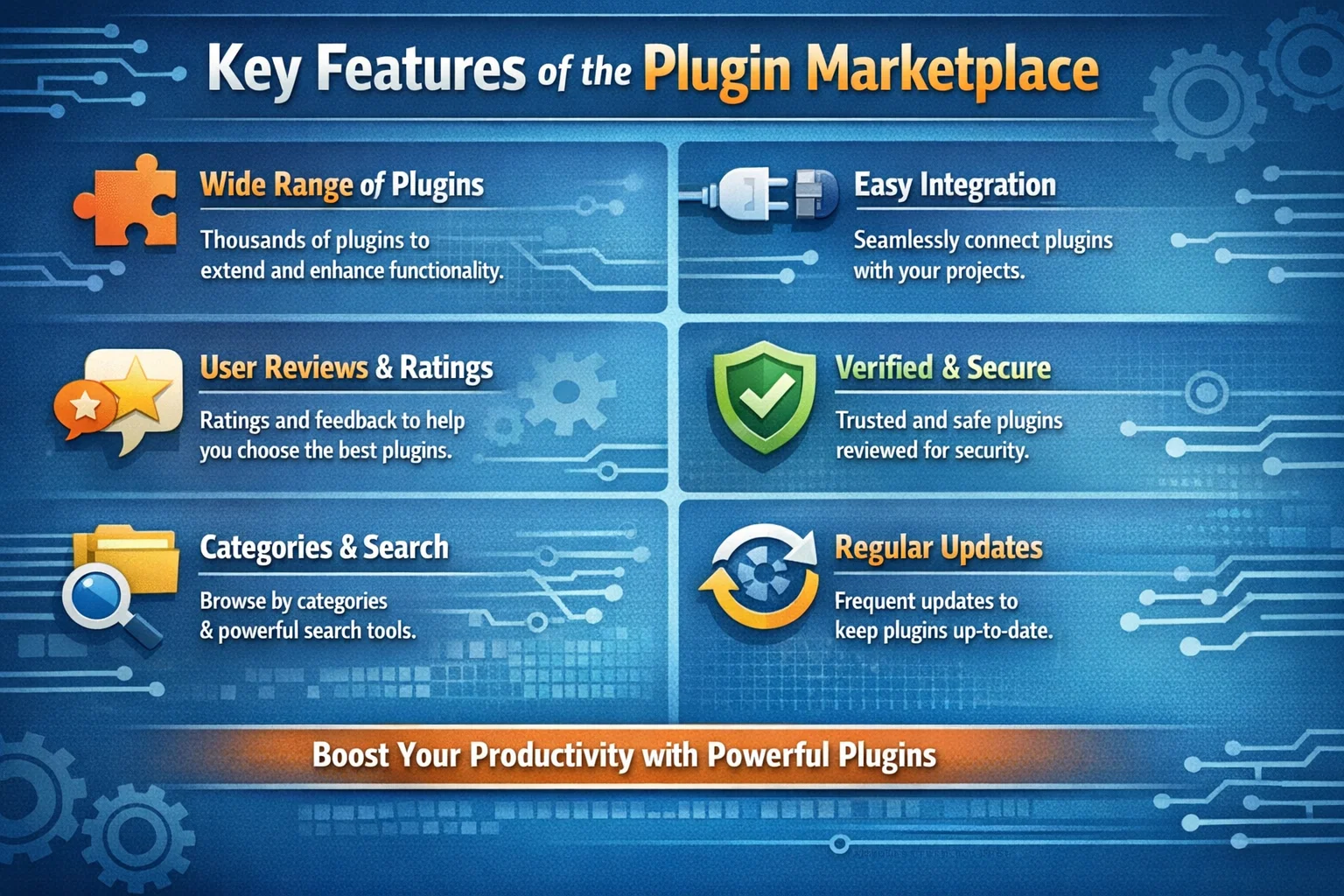

Key Features of the Plugin Marketplace

The marketplace has a user-friendly interface for creating, deploying, and managing plugins. It supports plugin development with APIs, SDKs, and documentation. A review and rating system lets users evaluate and compare plugins on quality, functionality, and performance. As stated by the GitHub community, open-source plugins can significantly accelerate the development of AI applications.

"The launch of the Cursor plugin marketplace is a significant milestone in the development of AI agents, and we are excited to see the innovative plugins that will be created by our community of developers." – Cursor Team

Future of AI Agents and Plugin Marketplaces

The launch points to the growing importance of AI agents and plugin marketplaces in the technology industry. As AI continues to improve, more applications and use cases are likely to emerge. Cognitive services and conversational AI can enhance agent capabilities, helping agents interact more effectively with humans and perform complex tasks. According to FutureTools News, the outlook for AI agents and plugin marketplaces is promising, with significant opportunities for growth and innovation.