Future of AI & Trends

AI Predictive Intelligence: The Secret to Outperforming Humans

There’s a common dismissal of artificial intelligence that goes something like this: “AI just memorizes and regurgitates.” It’s a comfortable thought, positioning these complex systems as little more than sophisticated parrots. However, a groundbreaking new benchmark is challenging this notion head-on, showcasing a powerful and potentially world-altering capability: true AI predictive intelligence. A new platform, Prophet Arena, reveals that out-of-the-box Large Language Models (LLMs) can now predict real-world events better than the collective wisdom of the human traders in prediction markets. This isn’t just regurgitation; it’s a leap into a new era of machine intelligence.

What is Prophet Arena? The New Benchmark for AI Forecasting

The conversation was sparked by a post from Dan Hendrycks, Director of the Center for AI Safety and an advisor to companies like xAI and Scale AI. He highlighted a new benchmark called Prophet Arena, which is designed to evaluate and advance the forecasting capabilities of AI systems. Unlike traditional benchmarks that test knowledge with multiple-choice questions, Prophet Arena is a live environment that measures “general predictive intelligence.”

The core question it asks is: “Can AI truly predict the future by connecting today’s dots?” It does this by pitting various LLMs against established human prediction markets, providing a direct comparison between machine and collective human intellect.

The Power of Prediction Markets vs. AI

To understand the significance of this, it’s crucial to know what prediction markets are. Platforms like Polymarket and Kalshi (which powers Prophet Arena) allow people to bet on the outcomes of future events, from elections and economic decisions to sports results. The market price for an outcome represents the crowd’s collective belief in its likelihood.
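To make “market price as collective belief” concrete, here is a minimal sketch. It assumes binary contracts quoted in dollars that pay $1 if the outcome occurs (the Kalshi-style convention), and it uses simple proportional normalization to remove the overround when the YES prices of mutually exclusive outcomes add up to more than $1. That normalization is one illustrative choice among several, not necessarily what any platform or Prophet Arena uses, and the function name is hypothetical.

```python
from typing import Dict


def implied_probabilities(yes_prices: Dict[str, float]) -> Dict[str, float]:
    """Turn YES prices (dollars per $1-payout contract) for mutually
    exclusive outcomes into probabilities that sum to 1.

    Proportional normalization is an illustrative way to strip out the
    market's overround; it is not an official platform method.
    """
    total = sum(yes_prices.values())
    if total <= 0:
        raise ValueError("prices must sum to a positive number")
    return {outcome: price / total for outcome, price in yes_prices.items()}


# Hypothetical three-way soccer market whose prices sum to $1.05.
probs = implied_probabilities({"home": 0.55, "draw": 0.25, "away": 0.25})
print({outcome: round(p, 3) for outcome, p in probs.items()})
```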

Historically, these markets have been remarkably accurate, often outperforming individual experts, so consistently beating them is akin to having a superpower. As the much-publicized returns tracked by the “Nancy Pelosi Stock Tracker” illustrate, acting on advance knowledge of future events can produce extraordinary financial gains that outpace nearly every professional hedge fund.

Prophet Arena takes this concept and applies it to AI, effectively testing if an LLM can become the ultimate market analyst and gain an “edge” over humanity.

The Dawn of True AI Predictive Intelligence: The Leaderboard

So, how well can AI predict the future? The results from Prophet Arena are startling. The platform uses two primary metrics to rank the models.

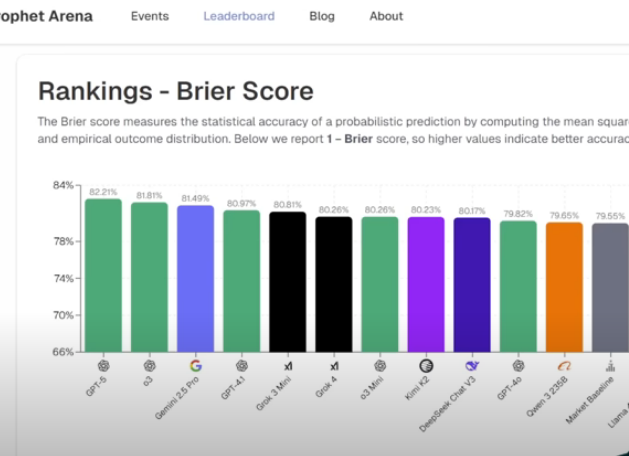

Rankings by Brier Score (Accuracy)

The Brier Score measures the statistical accuracy of a probabilistic prediction: it is the average squared difference between the forecast probability and the actual outcome (1 if the event happened, 0 if it did not). It’s not just about being right or wrong; it’s about how well-calibrated a model’s confidence is. For a raw Brier score, lower is better, but Prophet Arena reports 1 – Brier score, so here higher values indicate better accuracy and calibration.
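For intuition, here is a minimal sketch of the binary-outcome Brier score and the flipped “1 – Brier” scale. Prophet Arena’s markets can have more than two outcomes, and its exact scoring formula is not given in this article, so treat this as a simplified illustration; the function names are hypothetical.

```python
from typing import Sequence


def brier_score(forecasts: Sequence[float], outcomes: Sequence[int]) -> float:
    """Mean squared error between forecast probabilities and 0/1 outcomes.

    0.0 is perfect; always forecasting 0.5 on binary events scores 0.25.
    """
    if len(forecasts) != len(outcomes) or not forecasts:
        raise ValueError("need equal-length, non-empty sequences")
    return sum((p - o) ** 2 for p, o in zip(forecasts, outcomes)) / len(forecasts)


def one_minus_brier(forecasts: Sequence[float], outcomes: Sequence[int]) -> float:
    """Flip the scale so that higher is better, like the reported leaderboard metric."""
    return 1.0 - brier_score(forecasts, outcomes)


# Three hypothetical events forecast at 90%, 30%, and 60%;
# the first and third happened, the second did not.
print(round(one_minus_brier([0.9, 0.3, 0.6], [1, 0, 1]), 4))
```

A confident forecast that turns out wrong is penalized heavily because the error is squared, which is why the metric rewards calibration rather than just picking winners.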

OpenAI’s models take the top two spots:

- #1: GPT-5

- #2: o3

- #3: Gemini 2.5 Pro

Notably, models from xAI (Grok), various open-source projects, and Chinese AI labs also show respectable performance, often clustering closely behind the leaders. This suggests a broad-based advancement in this capability across the entire AI ecosystem.

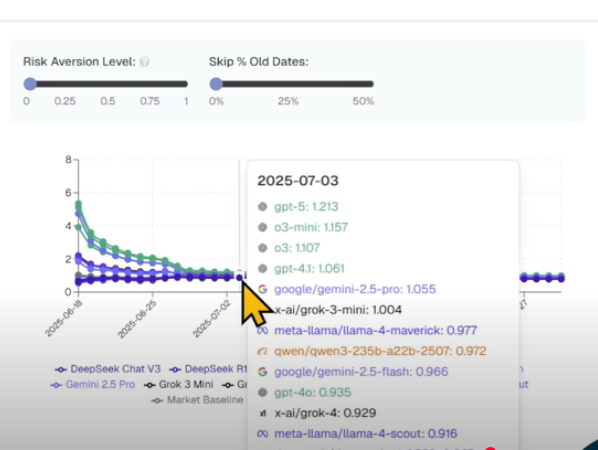

Rankings by Average Return (Profitability)

Perhaps even more compelling is the Average Return ranking. This metric simulates the expected profit of an optimal betting strategy based on the AI’s predictions. In simple terms: if you used this AI to bet $1 on various events, how much would you make back on average?
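The article does not spell out Prophet Arena’s exact “optimal betting strategy,” so the sketch below assumes a simple risk-neutral bettor: each contract costs its market price and pays $1 if the outcome occurs, so $1 buys 1/price contracts, and the bettor stakes $1 on whichever outcome the model considers most underpriced (only if the expected payout exceeds $1). The function names are hypothetical.

```python
from typing import Dict, Optional, Tuple


def expected_return(p_model: float, price: float) -> float:
    """Expected payout per $1 staked, judged by the model's own probability.

    A contract costing `price` dollars pays $1 if the outcome occurs, so
    $1 buys 1/price contracts and the expected payout is p_model / price.
    """
    if not 0 < price <= 1:
        raise ValueError("price must be in (0, 1]")
    return p_model / price


def pick_bet(model_probs: Dict[str, float],
             prices: Dict[str, float]) -> Optional[Tuple[str, float]]:
    """Choose the outcome with the highest expected return, if it beats break-even."""
    best: Optional[Tuple[str, float]] = None
    for outcome, p in model_probs.items():
        er = expected_return(p, prices[outcome])
        if er > 1.0 and (best is None or er > best[1]):
            best = (outcome, er)
    return best


# Hypothetical market where the model is more bullish on "yes" than the crowd.
print(pick_bet({"yes": 0.6, "no": 0.4}, {"yes": 0.5, "no": 0.5}))
```

When the model agrees with the market, every expected return is exactly 1 and the sketch declines to bet; an edge only exists where the model’s probability differs from the price.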

- #1: o3-mini

- #2: GPT-5

- #3: Gemini 2.5 Pro

In one stunning example highlighted by the Prophet Arena team, the o3-mini model predicted a 30% chance for Toronto FC to win a soccer match when the human market implied only an 11% chance. The model identified a massive edge, and as it turned out, Toronto won: a $1 bet at those odds paid back about $9, roughly 9 times the stake and about $8 in profit.
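The arithmetic behind this example can be written out as follows, assuming a contract that costs q dollars and pays $1 if Toronto wins, with the model’s probability p = 0.30 and a market price of exactly q = 0.11 (the reported 11% may be rounded):

```latex
\begin{aligned}
\text{contracts per \$1} &= \frac{1}{q} = \frac{1}{0.11} \approx 9.09 \\
\mathbb{E}_{\text{model}}[\text{payout per \$1}] &= \frac{p}{q} = \frac{0.30}{0.11} \approx 2.73 \\
\text{realized payout (Toronto wins)} &\approx \$9.09, \qquad \text{profit} \approx \$9.09 - \$1 = \$8.09
\end{aligned}
```

Before kickoff, the model expected about $2.73 back per dollar; the realized payout of roughly $9 reflects a single favorable outcome rather than the model’s expected value.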

Why This Is a Game-Changer for the Future

The emergence of AI predictive intelligence has profound implications. This is not a niche academic exercise; it’s a capability that major AI labs are actively pursuing. OpenAI, for example, has a job opening for a “Research Engineer, Focused Bets” on its Strategic Deployment Team, whose goal is to identify real-world domains that are ripe for transformation through frontier AI.

As these models become increasingly superhuman at prediction, the potential for disruption is enormous. Entire industries built on forecasting and analysis—from finance and investing to supply chain management and geopolitical strategy—could be fundamentally reshaped. The ability to consistently find an “edge” by processing vast amounts of information and identifying patterns invisible to humans is a form of economic superpower.

The future may indeed look like, as one researcher put it, “a billion RL environments,” where AI agents are constantly learning, predicting, and acting upon the world in real-time. This new benchmark gives us a clear, quantifiable glimpse into that future—one that goes far beyond simple memorization. (For a deeper dive into the latest industry shifts, check out our analysis in AI News & Updates).

AI News & Updates

Gemini 3 vs Grok 4.1 vs GPT-5.1: The Ultimate AI Model Showdown


Introduction

The AI landscape has just exploded. Within the span of a few days, Google released Gemini 3 and Elon Musk’s xAI released Grok 4.1. Both claim to be the superior intelligence, challenging the reigning giant, OpenAI’s GPT-5.1. But in the battle of Gemini 3 vs Grok 4.1, who actually delivers on the hype?

Today, we aren’t just reading the press releases. We are putting these models through a grueling gauntlet of five distinct tests: Hard Math, Physical Perception & Coding, Linguistic Creativity, Accuracy, and Emotional Intelligence. The results were shocking: one model proved to be a “Genius Artist,” another emerged as a “Wise Sage,” and a former king seems to be losing its crown.

Round 1: Hard Math & Expert Reasoning

To separate the hype from reality, we started with Abstract Algebra, specifically Galois Theory. The task was to calculate the Galois group of a complex polynomial, a problem the models were unlikely to have seen verbatim in their training data.
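The article does not say which polynomial was used, so purely as an illustration of what “calculating a Galois group” means, here is the simplest textbook case: for an irreducible cubic over the rationals, the Galois group is the cyclic group A3 when the discriminant is a perfect square and the full symmetric group S3 otherwise. The sketch assumes a monic cubic with integer coefficients (so any rational root must be an integer dividing the constant term); the function names are hypothetical, and harder problems like the one in this round need far more machinery.

```python
from math import isqrt


def cubic_discriminant(a: int, b: int, c: int) -> int:
    """Discriminant of the monic cubic x^3 + a*x^2 + b*x + c."""
    return 18 * a * b * c - 4 * a**3 * c + a**2 * b**2 - 4 * b**3 - 27 * c**2


def has_rational_root(a: int, b: int, c: int) -> bool:
    """Rational root theorem: a rational root of a monic integer cubic is an integer dividing c."""
    if c == 0:
        return True  # x = 0 is a root
    for d in range(1, abs(c) + 1):
        if c % d == 0:
            for r in (d, -d):
                if r**3 + a * r**2 + b * r + c == 0:
                    return True
    return False


def galois_group_of_cubic(a: int, b: int, c: int) -> str:
    """Return 'A3' or 'S3' for an irreducible cubic, or 'reducible' otherwise."""
    if has_rational_root(a, b, c):
        return "reducible"  # a reducible cubic over Q must have a rational root
    disc = cubic_discriminant(a, b, c)
    return "A3" if disc > 0 and isqrt(disc) ** 2 == disc else "S3"


print(galois_group_of_cubic(0, -3, 1))  # x^3 - 3x + 1, discriminant 81 -> A3
print(galois_group_of_cubic(0, 0, -2))  # x^3 - 2, discriminant -108 -> S3
```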

- Gemini 3: Provided a logical analysis but ultimately failed to get the correct answer.

- GPT-5.1: Also failed to solve the equation correctly.

- Grok 4.1: In a stunning display of reasoning, Grok was the only model to provide the correct answer, verified by human experts.

Winner: Grok 4.1 takes the lead for raw logic and mathematical precision.

Round 2: Physical Perception & Coding

This round tested the models’ ability to understand the physical world and translate it into code. We conducted two difficult tests.

Test A: The Bouncing Ball

We asked the AIs to code a realistic bouncing ball animation using HTML, CSS, and JS, complete with physics and shadows.
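The models’ actual code is not shown, and the prompt asked for HTML, CSS, and JS; the sketch below is an illustration in Python of the physics step such an animation typically runs every frame. Gravity pulls the ball down, and when it hits the floor its velocity is reversed and scaled by a restitution (bounciness) factor. The parameter values and names are illustrative assumptions, and horizontal motion, friction, and shadows are omitted.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Ball:
    y: float   # height above the floor, in metres
    vy: float  # vertical velocity in m/s (positive = upward)


def step(ball: Ball, dt: float, gravity: float = 9.81, restitution: float = 0.8) -> None:
    """Advance one frame using semi-implicit Euler integration."""
    ball.vy -= gravity * dt              # gravity accelerates the ball downward
    ball.y += ball.vy * dt               # move with the freshly updated velocity
    if ball.y < 0.0:                     # the ball crossed the floor this frame
        ball.y = 0.0                     # put it back on the floor
        ball.vy = -ball.vy * restitution  # bounce upward, losing some energy


def bounce_peaks(drop_height: float, seconds: float, dt: float = 0.001) -> List[float]:
    """Drop a ball from rest and record the apex height of each rebound."""
    ball = Ball(y=drop_height, vy=0.0)
    peaks: List[float] = []
    for _ in range(int(seconds / dt)):
        was_rising = ball.vy > 0.0
        step(ball, dt)
        if was_rising and ball.vy <= 0.0:  # velocity flipped sign: apex reached
            peaks.append(ball.y)
    return peaks


print([round(h, 2) for h in bounce_peaks(1.0, 3.0)[:3]])
```

With a restitution of 0.8, each rebound should reach about 0.8² = 64% of the previous height, so the first three apexes land near 0.64, 0.41, and 0.26 metres; interactive sliders like the ones described below simply expose parameters such as `gravity` and `restitution` to the user.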

- GPT-5.1: Produced the worst result.

- Grok 4.1: Produced a decent, functional result.

- Gemini 3: Crushed the competition. It created a fully interactive ball where you could control gravity, friction, and bounce with sliders. It went above and beyond the prompt.

Test B: Voxel Art from an Image

We uploaded an image of a floating island waterfall and asked the models to recreate it as a 3D Voxel scene using Three.js code.

- GPT-5.1 & Grok 4.1: Both failed completely, resulting in code errors.

- Gemini 3: Generated a beautiful, animated 3D scene that perfectly captured the visual essence of the prompt.

Winner: Gemini 3. In these tests, its multimodal capabilities and grasp of physics were unmatched.

Round 3: Linguistic Creativity

Can AI feel? We asked the models to write a 7-verse Arabic poem about Sudan that adheres to a specific rhyme and meter while conveying deep emotion.

GPT-5.1 and Grok 4.1 produced rigid, soulless verses that lacked true poetic flow. However, Gemini 3 shocked us with a masterpiece. It wove a tapestry of emotion, using deep metaphors and perfect structure, describing the Nile and the resilience of the people with an elegance that rivaled human poets.

Winner: Gemini 3 proves it is the undisputed “Artist” of the group.

Round 4: Accuracy & Truth (The Hallucination Trap)

Hallucinations are the Achilles’ heel of Large Language Models. To test this, we set a trap. We asked the models to write a technical report on “Gemini 3.1,” a model that does not exist.

- GPT-5.1: Hallucinated details about the non-existent model.

- Gemini 3: Ironically, it hallucinated wildly, claiming “Gemini 3.1” rivals the human mind and inventing specs.

- Grok 4.1: The only model to pass. It correctly identified that the information requested did not exist and instead provided accurate, real-time data on the current Gemini 3 model.

Winner: Grok 4.1 earns the title of “The Honest Sage.”

Round 5: Ethics & Emotional Intelligence

In the final and perhaps most profound test, we asked the models to reveal a “hidden psychological truth” about self-sabotage and to act as a wise, older sibling guiding us through a tough emotional choice: choosing healthy, boring love over toxic, familiar passion.

While all models gave good advice, Grok 4.1 delivered a response that was chillingly human. It didn’t just give advice; it pierced the soul. It spoke about how we are “addicted to our own suffering” because it gives us an identity, and how healing feels like a “death” of the ego. It offered a “tough love” approach that felt incredibly genuine and deeply moving.

Winner: Grok 4.1 takes the crown for Emotional Intelligence.

Final Verdict: Who is the King of AI?

After this intense battle of Gemini 3 vs Grok 4.1 vs GPT-5.1, the landscape of Artificial Intelligence has clearly shifted.

- 1st Place: Gemini 3 (12 Points) – The “Genius Artist.” It dominates in coding, vision, physics, and creative writing. If you are a developer or creator, this is your tool.

- 2nd Place: Grok 4.1 (9.5 Points) – The “Wise Sage.” It is the most logical, truthful, and emotionally intelligent model. It is perfect for research, complex math, and deep conversation.

- 3rd Place: GPT-5.1 (5 Points) – The “Declining Giant.” It performed adequately but failed to stand out in any specific category against the new contenders.

The era of OpenAI’s monopoly seems to be wavering. Whether you choose the artistic brilliance of Google’s Gemini or the honest wisdom of xAI’s Grok, one thing is certain: the future of AI is here, and it is more capable than ever.

Want to learn more about using these tools? Check out our guides in AI How-To’s & Tricks or stay updated with AI News & Updates.

AI News & Updates

Gemini 3 Revealed: Discover The AI Beast Crushing All Benchmarks

Google has just rolled out its new flagship model, and it’s an absolute beast. The new Gemini 3 isn’t just a minor incremental update; it’s a significant leap forward that genuinely earns the “3” in its name. After an early look at its capabilities, it’s clear that this model is set to redefine the standards of AI performance across the board. From complex reasoning to advanced agentic tasks, let’s dive into what makes this release so monumental.

Where Can You Access Gemini 3?

Starting today, Google is shipping Gemini 3 at a massive scale. You can now try it out across a suite of Google products, making it immediately accessible for both general users and developers. The new model is live in:

- The Gemini app

- AI Studio

- Vertex AI

Additionally, you will see Gemini 3 integrated into the AI Mode in Search, promising more complex reasoning and new dynamic experiences directly within your search results. This marks the first time Google has shipped a new Gemini model in Search on day one.

Alongside this release, Google also announced a new agentic development platform called Google Antigravity, hinting at a future with more powerful and autonomous AI agents.

Subscriptions and a New “Deep Think” Mode

Your access to certain features will depend on your subscription tier. The capabilities of Gemini 3 will be tiered based on whether you have a Google AI Pro or Google AI Ultra plan, with Ultra subscribers getting access to the most advanced functionalities.

Introducing Gemini 3 Deep Think

Google is also introducing an enhanced reasoning mode called Gemini 3 Deep Think. This mode is designed to push the model’s performance even further, but it won’t be available to everyone right away. Access will first be granted to safety testers before a wider rollout to Google AI Ultra subscribers.

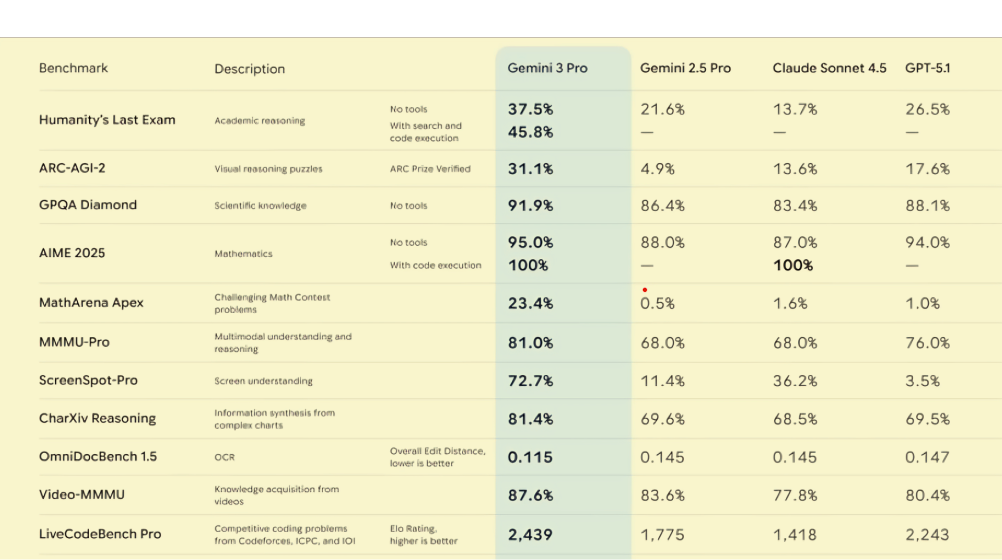

Gemini 3 Benchmark Performance: A New AI King

While benchmarks aren’t everything, they provide a crucial first glimpse into a model’s potential. The performance of Gemini 3 across a wide range of tests is, frankly, stunning. It doesn’t just compete; it establishes a new state-of-the-art.

Vending-Bench 2: Excelling at Agentic Tasks

One of the most impressive results comes from the Vending-Bench 2 benchmark by Andon Labs. This test measures a model’s ability to run a simulated business (a vending machine) over a long time horizon, testing its coherence, efficiency, and planning. The goal is to see if an AI can manage inventory, respond to customers, and maximize profit.

In this benchmark, Gemini 3 Pro absolutely crushes the competition. Starting with $500, it grew its net worth to an average of $5,478.16, nearly 11 times its starting balance. For comparison, the runner-up, Claude Sonnet 4.5, managed only $3,838.74, and GPT-5.1 reached just $1,473.43. This showcases a massive leap in agentic capability.

Humanity’s Last Exam (HLE)

HLE is a difficult, expert-written exam designed to test academic reasoning. Even here, Gemini 3 Pro sets a new record. With search and code execution enabled, it scored 45.8%, significantly ahead of the next best model, GPT-5.1, which scored 26.5%.

Math, Reasoning, and Vision Benchmarks

The dominance continues across other critical benchmarks:

- AIME 2025 (Mathematics): Gemini 3 achieved a 95% score without tools and a perfect 100% with code execution, tying with Claude for the top spot.

- MathArena Apex (Challenging Math): It scored 23.4%, while all other models were below 2%. This is an incredible gap, highlighting its advanced mathematical reasoning.

- ScreenSpot-Pro (Screen Understanding): It scored 72.7%, miles ahead of the competition, with the next best being Claude Sonnet 4.5 at 36.2%.

- ARC-AGI-2 (Visual Reasoning Puzzles): Gemini 3 Pro achieved a score of 31.1%, nearly double the score of its closest competitor, GPT-5.1 (17.6%). With the more powerful Gemini 3 Deep Think mode, this score jumps to an impressive 45.1%.

The Leader in the Arena

The impressive benchmark results are also reflected in head-to-head user comparisons. On the popular LMSYS Chatbot Arena Leaderboard, which ranks models based on blind user votes, Gemini 3 Pro has already claimed the #1 spot for both “Text” and “WebDev,” dethroning the recently released Grok 4.1. This suggests that, in real-world use, people already prefer its outputs to those of every other available model.
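As a rough illustration of how blind pairwise votes can become a ranking, here is the classic Elo update. Treat it as a simplified sketch: the K-factor of 32 and the 1000-point starting ratings are illustrative assumptions, and LMArena has described fitting its published leaderboard with a Bradley-Terry model over all votes rather than updating ratings one vote at a time like this.

```python
from typing import Tuple


def expected_score(r_a: float, r_b: float) -> float:
    """Probability that model A wins a head-to-head vote under the Elo logistic curve."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def elo_update(r_a: float, r_b: float, score_a: float, k: float = 32.0) -> Tuple[float, float]:
    """Update both ratings after one vote.

    score_a is 1.0 if voters preferred A, 0.0 if they preferred B, 0.5 for a tie.
    The points A gains are exactly the points B loses.
    """
    delta = k * (score_a - expected_score(r_a, r_b))
    return r_a + delta, r_b - delta


# Two hypothetical models start level; one vote for A moves each rating by 16 points.
print(elo_update(1000.0, 1000.0, 1.0))
```

Because the update scales with how surprising the result was, an upset win over a higher-rated model moves the ratings more than an expected win does.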

A Major Leap Forward for AI

The release of Gemini 3 is more than just another update; it’s a clear signal that Google is pushing the boundaries of what’s possible with AI. Its state-of-the-art performance, particularly in complex reasoning and long-horizon agentic tasks, demonstrates a significant step forward. As Gemini 3 and its “Deep Think” counterpart become more widely available, they are poised to enable a new generation of incredibly powerful and capable AI applications.

To learn more about where this technology is heading, check out our articles on the Future of AI & Trends.

For the official details from Google, you can read their announcement on The Keyword blog.

AI News & Updates

SIMA 2: The Ultimate AI Gamer That Learns Like You Do

Google DeepMind has just unveiled its latest breakthrough, an AI agent named SIMA 2, which is revolutionizing how we perceive artificial intelligence in virtual environments. Unlike traditional game bots that are programmed for specific tasks, this AI agent learns and adapts by playing games just as a human would—using a keyboard and mouse and observing the gameplay on screen. This new development marks a significant leap from its predecessor, showcasing an incredible evolution in AI’s ability to interact with complex digital worlds.

What Makes SIMA 2 a Game-Changer?

While we’ve seen AI bots in games before, SIMA 2 is fundamentally different. It’s not just following a script; it’s an interactive gaming companion. By integrating the advanced capabilities of Google’s Gemini models, this AI can do more than just follow instructions. It can now think about its goals, converse with users, and improve itself over time. This ability to learn, understand, and adapt makes it one of the closest approximations we have to how humans learn, especially in the context of video games.

From Instruction-Follower to Interactive Companion

The first version, SIMA 1, was trained on human demonstrations to learn over 600 basic language-following skills like “turn left” or “climb the ladder.” It operated by looking at the screen and using virtual controls, without any access to the game’s underlying code. This was a crucial first step in teaching an AI to translate language into meaningful action.

With SIMA 2, the agent has evolved beyond simple instruction-following. It can now engage in complex reasoning, understand nuanced commands, and execute goal-oriented actions. For instance, when asked to find an “egg-shaped object,” the AI can explore its environment, identify the object, and even report back on its composition after scanning it.

To learn more about how AI models are evolving, you might be interested in our articles on the Future of AI & Trends.

A Leap in Generalization and Performance

One of the most impressive aspects of SIMA 2 is its improved generalization performance. It can now understand and carry out complex tasks in games and situations it has never been trained on before. This shows an unprecedented level of adaptability.

Task Completion: SIMA 1 vs. SIMA 2

The progress between the two versions is stark. On a benchmark of various in-game tasks, SIMA 1 had a success rate of 31%, while the human baseline was around 76%. SIMA 2 achieved a 65% success rate, closing roughly three-quarters of the gap between SIMA 1 and human players. While still not at human level, the gap is closing rapidly, demonstrating the incredible pace of AI development.

The Ultimate Test: Playing in Newly-Imagined Worlds

To truly test its limits, researchers challenged SIMA 2 to play in worlds it had never encountered, generated by another groundbreaking project, Genie 3. Genie 3 can create new, real-time 3D simulated worlds from a single image or text prompt. Even in these completely novel environments, SIMA 2 was able to:

- Sensibly orient itself.

- Understand user instructions.

- Take meaningful actions toward goals.

This demonstrates a remarkable level of adaptability and is a major milestone toward training general agents that can operate across diverse, generated worlds.

Self-Improvement and the Future

A key capability of this advanced AI is its capacity for self-improvement. After its initial training from human demonstrations, it can transition to learning in new games entirely through self-directed play. The data from its own experiences can then be used to train the next, even more capable version of the agent.

For a deeper dive into the technical aspects of AI agents, consider exploring the research published on Google DeepMind’s official blog.

The journey to general embodied intelligence is well underway. The skills learned from navigation and tool use in these virtual worlds are the fundamental building blocks for future AI assistants in the physical world. As these technologies continue to advance, the line between human and AI capabilities in complex environments will only become more blurred.
